Pre-processing

Overview

In terms of data processing, the HFI ground segment handles two types of data, both made available via the MOC:

- telemetry data transmitted from the satellite, coming from the different subsystems of the satellite service module, from the sorption cooler, and from the two instruments;

- auxiliary data produced by the MOC, of which the HFI DPC uses only three products: the pointing data, the orbit data, and the time correlation data.

All data are retrieved by the HFI Level 1 software and stored in the HFI database.

Telemetry data

The digitized data from the satellite are assembled on board in packets, according to the ESA Packet Telemetry Standard and Packet Telecommand Standard, the CCSDS Packet Telemetry recommendations, and the ESA Packet Utilization Standard. The packets are dumped to the ground during the Daily Transmission Control Period, then consolidated by and stored at the MOC. Telemetry data contain the housekeeping data and the bolometer (i.e., science) data.

Housekeeping data

For several reasons (systems monitoring, potential impact of the environment, understanding of the bolometer data), the HFI Level 1 software gathers and stores in its database the satellite subsystems housekeeping parameters:

- Command and Data Management System;

- Attitude Control & Measurement Sub-system;

- Thermal Control System;

- Sorption Cooler System;

- HFI housekeeping parameters.

The structure and the frequency of the packets built by these subsystems and the format of the housekeeping parameters are described in the Mission Information Bases (MIBs). The HFI L1 software uses these MIBs to extract the housekeeping parameters from the packets. Given the status of each subsystem, the parameters are gathered in the HFI database in groups. The HFI L1 software builds in each group a vector of time (usually named TIMESEC) and a single vector per housekeeping parameter.
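The group layout described above (one shared time vector plus one vector per parameter) can be pictured with a small sketch. This is only an illustration, not the HFI L1 software: the class name `HousekeepingGroup`, the field names, and the parameter names `temp_a` and `temp_b` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class HousekeepingGroup:
    """Hypothetical container: one time vector shared by all parameters of a group."""
    name: str
    timesec: list = field(default_factory=list)     # plays the role of TIMESEC
    parameters: dict = field(default_factory=dict)  # parameter name -> list of values

    def append(self, time_s, values):
        """Add the parameters of one packet, all stamped with the same time."""
        if self.parameters and set(values) != set(self.parameters):
            raise ValueError("a group must always carry the same parameters")
        self.timesec.append(time_s)
        for key, value in values.items():
            self.parameters.setdefault(key, []).append(value)


# Two made-up packets feeding the same group.
group = HousekeepingGroup("example_group")
group.append(0.0, {"temp_a": 4.50, "temp_b": 1.60})
group.append(1.0, {"temp_a": 4.51, "temp_b": 1.61})
```

Keeping a single time vector per group means every parameter vector in that group has the same length and can be indexed with the same sample number.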

Bolometer data

The HFI science data are retrieved and reconstructed as described in section 3.1 of Planck-Early-VI[1].

The first stage of HFI data processing is performed onboard in order to generate the telemetry. This is described in the data compression section.

On board, the signal from the 72 HFI channels is sampled at 180.4 Hz by the Read-out Electronic Unit. For each channel, 254 samples are grouped into a compression slice. The Data Processing Unit then builds a set of several telemetry packets containing the compression slice data and adds to the first packet the start time of the compression slice. When receiving this set of telemetry packets, the L1 software extracts the 72 × 254 samples and computes the time of each sample based on the compression slice start time (digitized with 2⁻¹⁶ s = 15.26 μs quantization steps) and the mean time interval between samples. For the nominal instrument configuration, the sample integration time is measured to be Tsamp = 5.54404 ms.

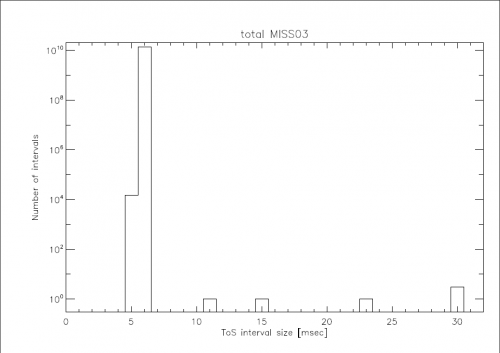

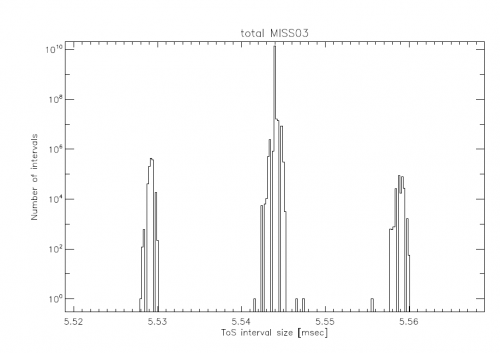

The two plots above show the histogram of time differences between two successive samples for the full mission. Six sample intervals (> 6 ms) correspond to three occurrences of packets lost on board when the on-board software of the Command and Data Management Unit was patched (18, 19, and 20 August 2009). The right plot is a blow-up of the left one. It shows the distribution around the mean value of 5.54 ms and the 15 μs quantization step.
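The time reconstruction described in this section can be sketched as follows. This is a minimal illustration rather than the L1 implementation: the function names are hypothetical, and rounding the start time down to the 2⁻¹⁶ s grid is an assumption (the text only gives the step size).

```python
import math

SAMPLES_PER_SLICE = 254      # samples per channel in one compression slice
T_SAMP = 5.54404e-3          # mean sample interval in seconds (nominal configuration)
TIME_QUANTUM = 2.0 ** -16    # quantization step of the slice start time, in seconds


def quantize_start_time(t_start):
    """Put a time in seconds on the 2**-16 s grid (assumption: rounding down)."""
    return math.floor(t_start / TIME_QUANTUM) * TIME_QUANTUM


def sample_times(t_start, n_samples=SAMPLES_PER_SLICE, t_samp=T_SAMP):
    """Time of each sample in a slice, assuming evenly spaced samples."""
    return [t_start + i * t_samp for i in range(n_samples)]


# Made-up slice start time, for illustration only.
start = quantize_start_time(1000.123456)
times = sample_times(start)
```

Because only the slice start time is quantized, the interval between the last sample of one slice and the first sample of the next can differ from Tsamp by a multiple of the 15.26 μs step, which is consistent with the structure seen in the blow-up histogram.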

Transfer functions

To ease the reading of data in the HFI database, so-called transfer functions are created. They allow software items to read data to which functions are applied on the fly. A simple example of a transfer function is the conversion of thermometer data from analogue-to-digital units (ADU) to kelvins. Here we show the two transfer functions applied to the bolometer samples in the data processing pipelines.

From raw signal to non-demodulated signal in volts (transf1_nodemod):

.

The different symbols are described below.

- bc refers to a bolometer, usually labelled by its electronic belt and channel, e.g., bc=00 refers to the "a" part of the first 100 GHz polarization-sensitive bolometer.

- Cbc is the bolometer sample in ADU.

- Nsample is the number of samples in half a modulation period. This parameter is common to all bolometer channels and (although kept fixed during the whole mission) is read from the housekeeping parameter. Nsample=40.

- Nblanck, bc is the number of samples suppressed at the beginning of each half-modulation period. Although kept fixed during the whole mission, it is read from the housekeeping parameter. For bc=00, Nblanck, 00=0.

- F1bc is a calibration factor. For bc=00, F1_00 ≈ 1.8×10⁷.

- Obc is close to 32768.

- Gamp, ETAL is the amplifier gain measured during the calibration phase. For bc=00, Gamp,ETAL=1.

- Gamp, bc is the current amplifier gain. Although kept fixed during the whole mission, it is read from the housekeeping parameter. For bc=00, Gamp,00=1.

From raw signal to demodulated signal in volts (transf1):

The same formula as above is used, but with the demodulation and a 3-point filter computed as

,

where

- n-1 and n+1 refer to the samples before and after the given n sample to demodulate,

- "parity" is computed by the HFI L1 software.

Statistics on the telemetry data

The following table gives some statistics about the data handled at the pre-processing level.

| | Nominal mission(1) | Full mission(1) | From launch to the end of full mission(1) |
|---|---|---|---|
| Duration | 473 days | 884 days | 974 days |
| Number of HFI packets generated on board (HSK/science)(2) | 19 668 436 / 376 294 615 | 37 762 493 / 704 852 262 | 41 689 4090 / 765 043 713 |
| Number of HFI packets lost(3) (HSK/science) | 2 / 20 | 2 / 20 | 2 / 20 |
| Ratio of HFI lost packets to those generated on board (HSK/science) | 1×10⁻⁷ / 5×10⁻⁸ | 5×10⁻⁸ / 3×10⁻⁸ | 5×10⁻⁸ / 3×10⁻⁸ |
| Number of different housekeeping parameters stored in the database (HFI/SCS/sat) | 3174 / 708 / 12390 | | |
| Number of science samples stored in the database | 530 632 594 653 | 991 929 524 565 | 1 090 125 748 960 |
| Number of missing science samples | 2 537 499 | 6 634 491(4) | 7 521 758 |
| Ratio of missing science samples to samples stored | 5×10⁻⁶ | 7×10⁻⁶ | 7×10⁻⁶ |

- (1) Mission periods are defined on this page.

- (2) Science packets refer to the number of telemetry packets containing science data (i.e., bolometer data and fine thermometer data) when the instrument is in observation mode (Application Program IDentifier = 1412). HSK packets refer to the number of HFI non-essential housekeeping telemetry packets (Application Program IDentifier = 1410).

- (3) All lost packets have been lost on board; no HFI packet was lost at ground segment level.

- (4) These lost science samples are distributed as follows:

  - 0.3% were due to a Single Event Upset;

  - 18.5% were lost during the three CDMU patch days and the subsequent clock resynchronization;

  - 29.1% were lost because of compression errors;

  - 52.1% were lost due to the EndOfSlew buffer overflow being triggered by solar flare events.

Pointing data

The pointing data are built by the MOC Flight Dynamics team. The pointing data are made available to the DPC via AHF files (see AHF description document and AHF files repository). All data contained in the AHF files are ingested into the HFI database. The present section describes the steps taken to produce the HFI pointing solution.

- During the stable pointing period (i.e., during the dwell), the data sampling rate is 8 Hz, while it is 4 Hz during the satellite slews. These data are therefore interpolated to the bolometer sampling rate using a spherical linear interpolation, as described in section 3.4 of Planck-Early-VI[1].

- The pointing solution is then corrected for the "wobble effect", using the information delivered by MOC Flight Dynamics in the AHF files.

- A final correction is then applied based on the study of the main planets and point sources seen by the bolometers.
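The first step above, spherical linear interpolation of the attitude to the bolometer sample times, can be sketched as below. This is a generic textbook slerp on unit quaternions, not the HFI code: the function names, the (w, x, y, z) component order, and the clamping at the ends of the sampled interval are assumptions made for the illustration.

```python
import bisect
import math


def slerp(q0, q1, u):
    """Spherical linear interpolation between unit quaternions q0 and q1, 0 <= u <= 1."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:                  # q and -q encode the same rotation: take the short arc
        q1 = [-c for c in q1]
        dot = -dot
    theta = math.acos(min(dot, 1.0))   # angle between the two quaternions
    if theta < 1e-9:               # nearly identical: plain linear interpolation is enough
        out = [a + u * (b - a) for a, b in zip(q0, q1)]
    else:
        w0 = math.sin((1.0 - u) * theta) / math.sin(theta)
        w1 = math.sin(u * theta) / math.sin(theta)
        out = [w0 * a + w1 * b for a, b in zip(q0, q1)]
    norm = math.sqrt(sum(c * c for c in out))
    return [c / norm for c in out]


def interpolate_attitude(times, quats, t):
    """Interpolate sampled attitude quaternions (e.g. at 8 Hz) to one sample time t."""
    i = bisect.bisect_right(times, t) - 1
    i = max(0, min(i, len(times) - 2))
    u = (t - times[i]) / (times[i + 1] - times[i])
    u = max(0.0, min(u, 1.0))      # clamp instead of extrapolating outside the samples
    return slerp(quats[i], quats[i + 1], u)


# Identity and a 90 degree rotation about z, sampled 0.125 s apart (8 Hz).
q_a = [1.0, 0.0, 0.0, 0.0]
q_b = [math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4)]
q_mid = interpolate_attitude([0.0, 0.125], [q_a, q_b], 0.0625)
```

Halfway between the two samples the result is a 45 degree rotation about the same axis, which is the constant-angular-rate behaviour that motivates slerp over a component-wise linear interpolation.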

Note: an "HFI ring" corresponds to each stable pointing period, when the spin axis is pointing towards an essentially fixed direction in the sky and the detectors repeatedly scan the same circle on the sky. More precisely, the HFI ring start time is defined as the time of the first thruster firing. The end time of the HFI ring is the start time of the following ring.

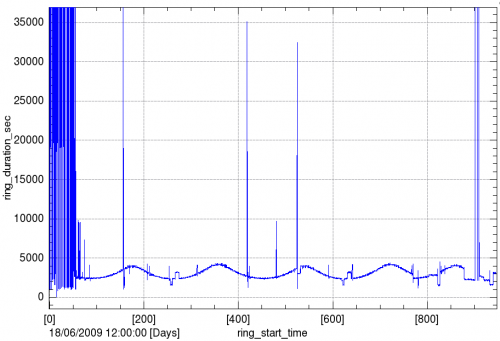

The plot above shows the evolution of the ring duration over the whole mission. It mainly reflects the scanning strategy, with a few long rings arising from operational constraints or tests.
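The ring definition given in the note (a ring starts at the first thruster firing and ends where the next ring starts) translates directly into a few lines of code. The sketch below uses hypothetical function names and made-up times; the `last_end` argument is an assumption needed to close the final ring, which the definition alone does not cover.

```python
def ring_intervals(start_times, last_end):
    """Pair each ring start time with its end time (the start of the next ring)."""
    ends = list(start_times[1:]) + [last_end]
    return list(zip(start_times, ends))


def ring_durations(intervals):
    """Duration of each ring, in the same unit as the input times."""
    return [end - start for start, end in intervals]


def ring_midtimes(intervals):
    """Time of the middle of each ring."""
    return [0.5 * (start + end) for start, end in intervals]


# Made-up thruster firing times in seconds, for illustration only.
rings = ring_intervals([0.0, 2400.0, 5000.0], last_end=7500.0)
durations = ring_durations(rings)
midtimes = ring_midtimes(rings)
```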

Orbit data

The satellite orbit velocity is built by the MOC Flight Dynamics team and made available to the HFI DPC via orbit files (see Orbit description document and orbit files repository).

Since those orbit files contain both effective and predictive data, they are regularly ingested and updated in the HFI database.

Note: these same data are also ingested in parallel into the JPL Horizons system under ESA’s responsibility.

The satellite orbit data preprocessing has the following steps.

- The sampling of the MOC-provided orbit velocity data is approximately once every 5 min. These data are interpolated to the time of the middle of the HFI rings.

- The reference frame of the orbit data is translated from the MOC-given Earth Mean Equator and Equinox J2000 (EME2000) reference frame to the Ecliptic reference frame in Cartesian coordinates.

- The Earth velocity provided by the NASA JPL Horizons system is interpolated to the time of the middle of the HFI rings. It is then added to the satellite velocity data.
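The three steps above can be sketched as follows. This is an illustration under stated assumptions, not the HFI pipeline: the function names are hypothetical, the interpolation is taken to be linear (the text does not specify the scheme), the Earth velocity is assumed to be already expressed in the ecliptic frame, and the frame change uses the standard J2000 mean obliquity of 23.4392911 degrees.

```python
import bisect
import math

OBLIQUITY_J2000_DEG = 23.4392911  # mean obliquity of the ecliptic at J2000


def interp_vector(times, vectors, t):
    """Linearly interpolate a sampled 3-vector to time t (assumed scheme)."""
    i = bisect.bisect_right(times, t) - 1
    i = max(0, min(i, len(times) - 2))
    u = (t - times[i]) / (times[i + 1] - times[i])
    return [a + u * (b - a) for a, b in zip(vectors[i], vectors[i + 1])]


def equatorial_to_ecliptic(v):
    """Rotate a Cartesian vector from EME2000 to the ecliptic frame (about the x-axis)."""
    eps = math.radians(OBLIQUITY_J2000_DEG)
    x, y, z = v
    return [x,
            math.cos(eps) * y + math.sin(eps) * z,
            -math.sin(eps) * y + math.cos(eps) * z]


def ring_velocity(t_mid, sat_times, sat_vel_eme, earth_times, earth_vel_ecl):
    """Total velocity at the middle of a ring, in ecliptic Cartesian coordinates."""
    sat = equatorial_to_ecliptic(interp_vector(sat_times, sat_vel_eme, t_mid))
    earth = interp_vector(earth_times, earth_vel_ecl, t_mid)
    return [s + e for s, e in zip(sat, earth)]


# Made-up velocities (km/s) sampled every 300 s, for illustration only.
v_total = ring_velocity(
    150.0,
    [0.0, 300.0], [[1.0, 0.0, 0.0], [3.0, 0.0, 0.0]],
    [0.0, 300.0], [[0.0, 30.0, 0.0], [0.0, 30.0, 0.0]],
)
```

A quick sanity check of the rotation is that the ecliptic pole, written in equatorial coordinates as (0, -sin ε, cos ε), must map onto the z-axis of the ecliptic frame.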

The use of the satellite orbit velocity is three-fold:

- computation of the CMB orbital dipole (see calibration section);

- computation of the positions of solar system objects (see data masking section);

- computation of the aberration correction.

Time correlation data

The MOC is responsible for providing information about the relationship between the satellite On Board Time (OBT) and Coordinated Universal Time (UTC). This information comes via several measurements of OBT and UTC pairs each day, measured during the satellite ranging (see Time Correlation document).

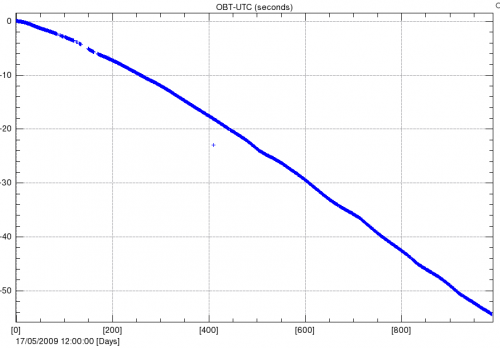

The plot above shows the (OBT, UTC) data pairs provided by the MOC since the Planck launch. The x-axis is the number of days since 17 May 2009, while the y-axis is the difference between OBT and UTC in seconds. The very slow drift is approximately 0.05 seconds per day. The small wiggles come from the satellite global temperature trends, due to its orbit and distance to the Sun.

Note: The isolated point on 1 June 2010 (just above 400 in the abscissa) is not of any consequence, being due to a misconfiguration of the ground station parameters.

A third-order polynomial is then fitted to the data. As the whole HFI data management and processing uses OBT, the OBT-UTC information is only used when importing orbit data from Horizons.
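A third-order polynomial fit of this kind can be sketched in plain Python. The example below assumes an ordinary least-squares fit solved through the normal equations, which the text does not confirm; the function names and the synthetic data (a 0.05 s/day drift plus a small quadratic term) are made up for the illustration.

```python
def fit_cubic(days, offsets):
    """Least-squares cubic fit of offsets against days (assumed fitting method).

    Days are rescaled to s in [-1, 1] before fitting to keep the system well conditioned.
    Returns (coeffs, mid, half) with offset ~ sum(coeffs[j] * s**j).
    """
    lo, hi = min(days), max(days)
    mid, half = 0.5 * (lo + hi), 0.5 * (hi - lo)
    s = [(d - mid) / half for d in days]
    # Normal equations A c = b, with A[j][k] = sum(s**(j+k)) and b[j] = sum(y * s**j).
    a = [[sum(v ** (j + k) for v in s) for k in range(4)] for j in range(4)]
    b = [sum(y * v ** j for v, y in zip(s, offsets)) for j in range(4)]
    # Gaussian elimination with partial pivoting.
    for col in range(4):
        pivot = max(range(col, 4), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, 4):
            f = a[row][col] / a[col][col]
            for k in range(col, 4):
                a[row][k] -= f * a[col][k]
            b[row] -= f * b[col]
    # Back substitution.
    coeffs = [0.0] * 4
    for row in reversed(range(4)):
        acc = b[row] - sum(a[row][k] * coeffs[k] for k in range(row + 1, 4))
        coeffs[row] = acc / a[row][row]
    return coeffs, mid, half


def evaluate(model, day):
    """Evaluate the fitted polynomial at a given day."""
    coeffs, mid, half = model
    s = (day - mid) / half
    return sum(c * s ** j for j, c in enumerate(coeffs))


# Synthetic (day, OBT - UTC) pairs, for illustration only.
days = [10.0 * i for i in range(101)]
offsets = [0.05 * d + 1.0e-6 * d * d for d in days]
model = fit_cubic(days, offsets)
```

Once fitted, `evaluate(model, day)` gives the OBT-UTC difference at any day inside the fitted range, which is all that is needed to convert a UTC-stamped product to OBT.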

References

- [1] Planck HFI Core Team, Planck early results. VI. The High Frequency Instrument data processing, A&A, 536, A6 (2011).
