Difference between revisions of "TOI processing LFI"

(→Spikes Removal) |

(→Fitting pipeline) |

||

| (116 intermediate revisions by 4 users not shown) | |||

| Line 2: | Line 2: | ||

==Overview== | ==Overview== | ||

| − | The LFI | + | The LFI Level 2 Pipeline analyses data from each horn of the instrument separately, one pointing period at a time, and stores the results in an object the length of an OD. Each diode of the horn is corrected for systematic effects. Next, measurements of the sky and the 4-K load are differenced, then the signals from one diode are combined with signals from the complementary diode in the same radiometer. Finally, photometric calibration is applied for each horn. |

===Pre-processing=== | ===Pre-processing=== | ||

| Line 8: | Line 8: | ||

Before the Level 2 pipeline is run, the Mission information and data sampling divisions are stored in the database, in order to improve the analysis. | Before the Level 2 pipeline is run, the Mission information and data sampling divisions are stored in the database, in order to improve the analysis. | ||

| − | The Mission information is a set of objects, one for each Operational Day (OD, as defined in the [[glossary]]), in which are stored pointing period data: | + | The Mission information is a set of objects, one for each Operational Day (OD, as defined in the [[glossary]]), in which are stored pointing period data: the DPC pointing ID (where 1 is the first pointing of the nominal mission); PSO pointing ID; start OBT of the pointing manoeuvre; start OBT of the stable pointing period; OBT of the end of the stable pointing period; and spin axis ecliptic longitude and latitude. |

| − | The sampling information is a set of objects, one for each LFI frequency, in which are stored for each pointing ID: start OBT of the pointing | + | The sampling information is a set of objects, one for each LFI frequency, in which are stored for each pointing ID: the start OBT of the pointing manoeuvre; th start OBT of the stable pointing period; the end OBT of the pointing period; the number of samples of the pointing, number of stable samples of the pointing; the starting sample of the stable pointing period; and the sample number from the start of the nominal mission. Valid samples and OBTs are defined to be those where any of the radiometers in a frequency band contain valid data. |

| − | ==ADC | + | ==ADC correction== |

| − | During the analysis of LFI date, | + | During the analysis of LFI date, a systematic effect was discovered that was common to both white noise and calibration. This turned out to be a nonlinearity in the analogue-to-digital converter (ADC) on board, as discussed further in {{PlanckPapers|planck2013-p02}}, {{PlanckPapers|planck2013-p02a}}, {{PlanckPapers|planck2014-a03||Planck-2015-A03}}, {{PlanckPapers|planck2014-a04||Planck-2015-A04}} and {{PlanckPapers|planck2016-l02}}. |

===Evaluation=== | ===Evaluation=== | ||

| Line 20: | Line 20: | ||

The mathematical model represents the digital ADC output as: | The mathematical model represents the digital ADC output as: | ||

| − | :<math> X = (V - \Delta) \gamma + x_0 </math> | + | :<math> X = (V - \Delta) \gamma + x_0 </math>, |

| − | where < | + | where <i>V</i> is the voltage input, γ is the DAE gain, Δ is the DAE offset and <i>x</i><sub>0</sub> is the DAE <i>T</i><sub>zero</sub>. |

| − | We can model the non-linearity as a function of the input voltage < | + | We can model the non-linearity as a function of the input voltage <i>R</i>(<i>V</i>). So we have the apparent inferred voltage <i>V'</i> and we can link it to the actual input voltage with: |

| − | :<math> | + | :<math> \{(V - \Delta) \gamma + x_0\} R(V) = X' = (V' - \Delta) \gamma + x_0 </math>, |

| − | so that | + | so that |

| − | :<math> R(V) = (V' - \Delta) \gamma + x_0 \over (V - \Delta) \gamma + x_0 </math> | + | :<math> R(V) = (V' - \Delta) \gamma + x_0 \over (V - \Delta) \gamma + x_0 </math>. |

| − | Since < | + | Since <i>V</i> >> Δ and <i>V</i> γ >> <i>x</i><sub>0</sub>, we can use the much simpler relation |

| − | :<math> R(V) = \frac{V'}{V} </math> | + | :<math> R(V) = \frac{V'}{V} </math>, |

| − | and we expect | + | and we expect this to be very near to unity for all <i>V</i>. |

| − | To find the response curve we have only the apparent voltage to work with, so we had to use the inverse response function < | + | To find the response curve we have only the apparent voltage to work with, so we had to use the inverse response function <i>R'</i>(<i>V'</i>) and replace the real input voltage with <i>T</i><sub>sys</sub> times the time varying gain factor <i>G</i>(<i>t</i>): |

| − | :<math> V = V'R'(V') = G(t)T_{sys} </math> | + | :<math> V = V'R'(V') = G(t)T_{\rm sys} </math>. |

| − | If we introduce a small signal on top of < | + | If we introduce a small signal on top of <i>T</i><sub>sys</sub>, which leads to increased detected voltage and corresponding apparent voltage increment, then |

| − | :<math> V + \delta V = (V' + \delta V') R' (V' + \delta V') = G(t) (T_{sys} + \delta T) </math> | + | :<math> V + \delta V = (V' + \delta V')\,R' (V' + \delta V') = G(t) (T_{\rm sys} + \delta T) </math>, |

| − | so carrying out the differentiation with respect to < | + | so carrying out the differentiation with respect to <i>V'</i> of the relation between true and apparent signal voltage leads to |

| − | :<math> \delta V = \left | + | :<math> \delta V = \left\{ V' {dR'(V') \over dV'} + R'(V') \right\} \delta V' = G(t) \delta T </math>. |

| − | We now assume < | + | We now assume <i>T</i><sub>sys</sub> and δ<i>T</i> are fixed and that the variations are due to slow drifts in the gain. Then we can isolate the terms |

| − | :<math> V' = {G(t) T_{sys} \over R'(V')} </math> | + | :<math> V' = {G(t) T_{\rm sys} \over R'(V')} </math>, |

| − | :<math> \delta V' = {G(t) \delta T \over V' {dR'(V') \over dV} + R'(V')} </math> | + | :<math> \delta V' = {G(t) \delta T \over V' {dR'(V') \over dV} + R'(V')} </math>. |

| − | Combining the equations | + | Combining the equations to remove the gain factor, we have |

| − | :<math> {V' R'(V') \over T_{sys}} = {\delta V' {dR'(V') \over dV'} + R'(V') \over \delta T } </math> | + | :<math> {V' R'(V') \over T_{\rm sys}} = {\delta V' \left\{{V'dR'(V') \over dV'} + R'(V')\right\} \over \delta T } </math>. |

| − | Rearranging and | + | Rearranging and setting <i>a</i> = δ<i>T</i>/<i>T</i><sub>sys</sub> gives |

| − | :<math> {dR'(V') \over dV' } = \left( {a \over | + | :<math> {dR'(V') \over dV' } = \left( {a V'\over \delta V'} - {1 \over V'} \right) R'(V') </math>. |

| − | + | We see the expected direct proportionality of δ<i>V'</i> to <i>V'</i>, based on the assumption that the variations in voltage are due to overall gain drift, so that the amplitude of voltage and signal will vary together. Then there is the additional differential term, which will pull the signal amplitude away from the linear relationship. So if we plot measured white noise or dipole gain factor against recovered voltage, we should see a linear curve with variations due to local slope changes at particular voltages. The linear part can be taken out and the differential part fitted. This was numerically integrated to obtain the inverse response curve, which is then used to convert the measured voltages to corrected voltages. | |

===Application=== | ===Application=== | ||

| − | + | In the 2015 release we fit white noise amplitudes binned with respect to detector voltage with a simple spline curve and we translate results into a correction curve as described in {{PlanckPapers|planck2014-a03||Planck-2015-A03}}. Instead, the fit now relies on a more physically motivated function based on voltage steps (see {{PlanckPapers|planck2016-l02}} for futher details). | |

| + | We compared the results between this method and the previous one by means of null-maps, checking the consistency of the resulting new gain solution with the new ADC correction applied. This was done by computing the rms scatter from the 8 different survey maps taking into account pixel hits and zero levels. A ``goodness" parameter can then be derived from the mean level of the | ||

| + | masked null-map made between these survey scatter maps. | ||

| + | The 70 and 44 GHz null-maps failed to show significant improvements but this was not the case at 30 GHz. Inspecting the ADC solutions it became clear that the higher noise per radiometer and low ADC non-linearity at 70 GHz did not allow for a good fit. Meanwhile the ADC effect was so large at 44 GHz that new model could not reproduce some of the details, and so led to some small residuals. | ||

| + | The 30 GHz system has much lower noise and less thermal drift in the gain, meaning that more voltage levels were revisited more | ||

| + | often thus yielding a more consistent ADC model curve. It was therefore decided to keep this new solution only for the 30 GHz channels. The other two channels thus have the same correction for ADC non-linearity as in the previous release. | ||

| − | + | ==Spike removal== | |

| − | + | Some of the LFI receivers exhibit a small artefact with exactly 1-s period, that produces effects visible in the power spectra. The effect is a set of spikes at 1 Hz and harmonics. The spurious signal can be well modelled and is removed from the timelines. More information can be found in {{PlanckPapers|planck2013-p02}}, {{PlanckPapers|planck2013-p02a}}, {{PlanckPapers|planck2014-a03||Planck-2015-A03}}, {{PlanckPapers|planck2014-a04||Planck-2015-A04}} and {{PlanckPapers|planck2016-l02}}. | |

| − | + | ===Modelling=== | |

| − | + | The cause of the spikes at 1 Hz and harmonics is a tiny 1-s square-wave embedded in signals from the affected channels. The method used to estimate the 1-Hz signal is to build a template in the time domain, synchronized with the spurious signal. The first step is to divide each second of data into time bins using OBT. The number of bins is computed using | |

| − | + | :<math> N_{\rm bins} = f_{\rm samp} \times template\_resolution</math> | |

| − | + | where <i>f</i><sub>samp</sub> is the sampling frequency and is 136 Hz at 70 GHz, 80 at 44 GHz, and 56 at 30 GHz. Then the vector of bins is initialized with time intervals. To avoid aliasing effects, the template resolution is √3. | |

| + | We can write the process adding indices to the time sample; here a lower index denotes the particular time sample, while the upper index labels the bin into which the sample falls. The linear filter can be written as | ||

| − | + | :<math> s(t_{i}^{j}) = a_j \left\{1- \Delta x (t_{i}^{j}) \right\} + a_{j+1} \Delta x (t_{i}^{j})</math>. | |

| − | |||

| − | |||

| − | + | Here Δ<i>x</i>(<i>t</i><sub>i</sub><sup></sup>) is the filter weight, which is determined by where the sample lies within the bin. If we use <i>t</i><sup>j</sup> with only an upper index to denote the start of each bin, then we can write the filter weight as | |

| − | + | :<math> \Delta x (t_{i}^{j}) = {{{t_i^j - t^j} \over {t^{j+1} - t^j}}} </math>. | |

| − | |||

| − | :<math> \Delta x (t_{i}^{j}) = {{{t_i^j - t^j} \over {t^{j+1} - t^j}}} </math> | ||

In other words, the filter weight is the time sample value minus the start of the bin divided by the width of the bin. | In other words, the filter weight is the time sample value minus the start of the bin divided by the width of the bin. | ||

| − | We must estimate the parameters < | + | We must estimate the parameters <i>a</i><sub>j</sub> from the data. With the assumption that the instrument has stable noise properties, we can use a least squares algorithm to estimate the bin values: |

| − | :<math> {\partial \over \partial a_k} \sum_{i,j} \left( s(t_i^j) – d_i^j \right)^2 = 0 </math> | + | :<math> {\partial \over \partial a_k} \sum_{i,j} \left( s(t_i^j) – d_i^j \right)^2 = 0 </math>. |

| − | This can be represented in matrix equation | + | This can be represented in the matrix equation |

| − | :<math> | + | :<math> {\sf M}_{jk}a_k = b_j </math>, |

with the following definitions: | with the following definitions: | ||

| − | :<math> | + | :<math> {\sf M}_{k,k-1} = \sum_i \left\{1 - \Delta x (t_i^{k-1})\right\} \Delta x (t_i^{k-1}) </math>; |

| − | :<math> | + | :<math> {\sf M}_{k,k} = \sum_i \left\{1 - \Delta x (t_i^k)\right\}^2 \Delta x (t_i^{k-1})^2 </math>; |

| − | :<math> | + | :<math> {\sf M}_{k,k+1} = \sum_i \left\{1 - \Delta x (t_i^k)\right\} \Delta x (t_i^k) </math>; |

| − | :<math> | + | :<math> {\sf M}_{k,k+n} (|n| > 1) = 0 </math>; |

| − | :<math> b_j = \sum_i d_i^k | + | :<math> b_j = \sum_i d_i^k \left\{1- \Delta x (t_k^i)\right\} + d_i^{k-1}\Delta x (t_i^{k-1}) </math>. |

| − | With these definitions we have to make use of periodic boundary conditions to obtain the correct results, such that if < | + | With these definitions we have to make use of periodic boundary conditions to obtain the correct results, such that if <i>k</i> = 0, then <i>k</i>-1 = <i>n</i>-1 and if <i>k</i> = <i>n</i>-1, then <i>k</i>+1 = 0. Once this is done, we have a symmetric tridiagonal matrix with additional values at the upper right and lower left corners. The matrix can be solved with LU decomposition. In order to be certain of the numerical accuracy of the result, we can perform a simple iteration. The solving of the linear system and the iterative improvement of the solution are implemented as suggested in Numerical Recipes (Press et al., Cambridge University Press, 2007). |

===Application=== | ===Application=== | ||

| − | For each of the 44 LFI diodes there is | + | For each of the 44 LFI diodes there is a corresponding object in the Database. After studying the amplitude of the spikes at other LFI frequencies, we chose to apply the correction only to the 44 GHz radiometers. Each object contains three columns: the bins start time vector; the sky amplitudes; and the reference amplitudes. |

| − | For each sample the value to be subtracted is computed using | + | For each sample the value to be subtracted is computed using |

| − | :<math> V = | + | :<math> V = A^{\rm sky}_k (1 - \Delta x (t_k)) + A^{\rm sky}_{k+1} \Delta x (t_k) </math>, |

| − | where k is the index of the bins at a given time. | + | where <i>k</i> is the index of the bins at a given time. |

| − | == | + | ==Filling gaps in the data== |

| − | During the mission | + | During the mission, a small number of data packets were lost ({{PlanckPapers|planck2013-p02}}, {{PlanckPapers|planck2014-a03||Planck-2015-A03}} and {{PlanckPapers|planck2016-l02}}). Moreover, in two different and very peculiar situations, LFI was shut down and restarted, giving inconsistencies in data sampling. None of these data are used for scientific purposes, but to avoid discrepancies in data analysis all of the radiometers at the same frequency must have the same number of samples. |

| − | To | + | To ensure this, we compare the length of the data stream to be reduced in a specific pointing period to the data stored in the sample information object. If the length is not the same, the OBT vector is filled with missing sample times, the data vector is filled with zeros, and in the flag column the bit for "gap" is entered. |

| − | ==Gain | + | ==Gain modulation factor== |

| − | The pseudo-correlation design of the LFI radiometers allows a dramatic reduction of < | + | The pseudo-correlation design of the LFI radiometers allows for a dramatic reduction of 1/<i>f</i> noise when the <i>V</i><sub>sky</sub> and <i>V</i><sub>load</sub> outputs are differenced (see [[LFI design, qualification, and performance#Overview|LFI instrument]] description). The two streams are slightly unbalanced, since one looks at the 2.7-K sky and the other looks at the 4.5-K reference load. To force the mean of the difference to zero, the load signal is multiplied by the gain modulation factor (<i>R</i>). For each pointing period this factor is computed using (see equation (3) in [[LFI design, qualification, and performance#Radiometer Chain Assembly (RCA)|LFI]] description) |

| − | :<math> R = { | + | :<math> R = { \left\langle V_{\rm sky}\right\rangle \over \left\langle V_{\rm load}\right\rangle} </math>. |

| − | Then the data are differenced using | + | Then the data are differenced using |

| − | :<math> | + | :<math> {\rm TOI}_{\rm diff}^i = V_{\rm sky}^i – R V_{\rm load}^i </math>. |

| − | This value for R minimizes the 1/f and the white noise in the difference timestream. The i index represents the diode and can be 0 or 1. | + | This value for <i>R</i> minimizes both the 1/<i>f</i> noise and the white noise in the difference timestream. The <i>i</i> index represents the diode and can be 0 or 1. |

| − | At this point the | + | At this point, we also set the manoeuvre flag bit to identify those samples that have missing data, using the information stored in the sampling information object. This identifies which data to ignore in the next step of the Pipeline. |

| − | The R values are stored in the database. At the same time the mean values of < | + | The <i>R</i> values are stored in the database. At the same time the mean values of <i>V</i><sub>sky</sub> and <i>V</i><sub>load</sub> are stored, so they can be used in other steps of the analysis. |

| − | ==Diode | + | ==Diode combination== |

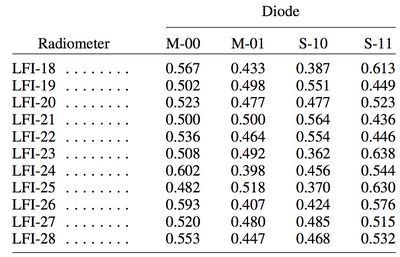

| − | The two complementary diodes of each radiometer are combined. The relative weights of the diodes in the combination are chosen for optimal noise. We assign relative weights to the uncalibrated diode streams based on their first order calibrated noise. | + | The two complementary diodes of each radiometer are combined. The relative weights of the diodes in the combination are chosen for optimal noise. We assign relative weights to the uncalibrated diode streams based on their first-order calibrated noise. |

===Evaluation=== | ===Evaluation=== | ||

| − | From first order calibration we compute an absolute gain < | + | From first-order calibration we compute an absolute gain <i>G</i><sub>0</sub> and <i>G</i><sub>1</sub>, subtract an estimated sky signal and calculate the calibrated white noise σ<sub>0</sub> and σ<sub>1</sub>, for the pair of diodes (see equation 6 in [[LFI design, qualification, and performance#Radiometer Chain Assembly (RCA)|LFI]] description). The weights for the two diodes (<i>i</i> = 0 or 1) are |

| − | :<math> W_i = {\sigma_i^2 \over G_{01}} {1 \over {\sigma_0^2 + \sigma_1^2}} </math> | + | :<math> W_i = {\sigma_i^2 \over G_{01}} {1 \over {\sigma_0^2 + \sigma_1^2}} </math>, |

| − | where the weighted calibration constant is given by | + | where the weighted calibration constant is given by |

| − | :<math> G_{01} = {1 \over {\sigma_0^2 + \sigma_1^2}} | + | :<math> G_{01} = {1 \over {\sigma_0^2 + \sigma_1^2}} \left(G_0 \sigma_1^2 + G_1 \sigma_0^2\right) </math>. |

| − | The weights are fixed to a single value per diode for the entire | + | The weights are fixed to a single value per diode for the entire data set. Small variations in the relative noise of the diodes would in principle require that we recalculate the weights on shorter timescales; however, we decided that a time-varying weight could possibly induce more significant (and hard to model) systematics, so we chose a single best estimate for the weights for each diode pair. |

| − | + | [[File:LFI_weights.jpg|400px]] | |

| − | + | :'''<small>Weights used in combining diodes.</small>''' | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | | | ||

| − | |||

| − | |||

| − | |||

===Application=== | ===Application=== | ||

| − | The weights in the table above are used in the formula | + | The weights in the table above are used in the formula |

| − | :<math> | + | :<math> {\rm TOI}_{\rm diff} = w_0 {\rm TOI}_{\rm diff,0} + w_1 {\rm TOI}_{\rm diff,1} </math>. |

| − | ==Planet | + | ==Planet flagging== |

<!-- | <!-- | ||

| Line 202: | Line 175: | ||

--> | --> | ||

| − | ===Extraction | + | ===Extraction method=== |

| − | + | Measurements of planets have been formed from samples containing flux from the planet, plus a surrounding region, projected onto a grid (microstripes), by assuming an elliptical Gaussian beam using parameters from instrument database. | |

| − | Microstripes are a way to extract and store relevant samples for | + | Microstripes are a way to extract and store relevant samples for planet detection. Here relevant samples are those affected by the planet plus samples in the neighbourhood (to establish a background level). The search radius for selecting samples as relevant is 5° around the planet's position, computed at the pointing period mid-time. For each sample we store SCET (Spacecraft Event Time), pointing directions, and calibrated temperature. Destriping is applied during the application of this procedure. |

| − | Random errors are estimated by taking the variance of samples entering each micromap pixel. This is fast | + | Random errors are estimated by taking the variance of samples entering each micromap pixel. This method is fast but not exact, since the variance is larger near a bright source. This can cause the noise to be overestimated by a factor of 2; however, given the large S/N for planetary observations, that is not a major drawback. |

| − | The apparent position of | + | The apparent position of a planet as seen from Planck at a given time is derived from [http://ssd.jpl.nasa.gov/?horizons JPL Horizons]. Positions are tabulated in steps of 15 minutes and then linearly interpolated at the sampling frequency of each detector. JPL Horizons tables allow us also to derive other quantities such as the planet-Planck distance and the planet-Sun distance, as well as the planet angular diameter, which affects the apparent brightness of the planet. |

| − | The antenna temperature is a function of the dilution factor, according to | + | The antenna temperature is a function of the dilution factor, according to |

| − | :<math> T_{ant,obs} = 4 log | + | :<math> T_{\rm ant,obs} = 4 \log 2\,T_{\rm ant,1} \left( {\theta \over b_{\rm FWHM} } \right) ^2 </math>, |

| − | where < | + | where <i>T</i><sub>ant,obs</sub> and <i>T</i><sub>ant,1</sub> are the observed and reduced <i>T</i><sub>ant</sub>, θ is the instantaneous angular diameter of the planet, and <i>b</i><sub>FWHM</sub> the beam full-width at half-maximum. |

| − | With the above definition < | + | With the above definition <i>T</i><sub>ant,1</sub> could be considered as the <i>T</i><sub>ant</sub> for a planet with <i>b</i><sub>FWHM</sub> = θ, but a more convenient view is to take a reference dilution factor <i>D</i><sub>0</sub>, as the dilution factor for a standardized angular diameter for the planet and fiducial FWHM beam <i>b</i><sub>FWHM</sub>, θ<sub>0</sub>, to have |

| − | :<math> D_0 = \left( {\theta_0 \over b_0 } \right) ^2 </math> | + | :<math> D_0 = \left( {\theta_0 \over b_0 } \right) ^2 </math>, |

| − | leading to the following definition of a standardized < | + | leading to the following definition of a standardized <i>T</i><sub>ant</sub>: |

| − | :<math> T_{ant,obs} = 4 log 2 T_{ant,0} \left( {b_{ | + | :<math> T_{\rm ant,obs} = 4 \log 2\, T_{\rm ant,0} \left( {b_{\rm FWHM,0} \over b_{\rm FWHM}} {\theta \over \theta_0} \right) ^2 </math>. |

| − | + | This has the advantage of removing variations among different detectors and transits, while keeping the value of <i>T</i><sub>ant</sub> similar to that seen by the instrument and then allowing a prompt comparison of signals and sensitivities. | |

===Application=== | ===Application=== | ||

| − | The OBT | + | The OBT vectors found by the searches are saved in a set of objects, one for each horn. In the Level 2 pipeline those OBTs are compared with the OBT vector of the data to set the planet bit flag where needed. |

| + | |||

| + | ==Photometric calibration== | ||

| + | |||

| + | Photometric calibration is the procedure used to convert data from volts to kelvins. The source of the calibration is the well understood CMB dipole, caused by the motion of the Solar System with respect to the CMB reference frame. To this signal we add the modulation induced by the orbital motion of Planck around the Sun. The resulting signal is then convoluted with the horn beam to obtain the observed dipole. For details refer to {{PlanckPapers|planck2014-a06||Planck-2015-A06}} and {{PlanckPapers|planck2016-l02}}. | ||

| + | |||

| + | In the 2018 release we decided to include the sky signal in the calibration process, on the same footing as the orbital and Solar dipole, including both temperature and polarization fluctuations. | ||

| + | Unfortunately, this is non-trivial, since the purpose of the experiment, in this case, is precisely to measure the polarized emission from the sky. A good approximation, however, can be estalished through an iterative process that alternates between gain calibration, map making and astrophysical component separation: | ||

| − | + | # Let T(sky) be the full best-fit ''Planck''2015 astrophysical sky model, including CMB, synchrotron, free-free, thermal and spinning dust and CO emission for temperature maps, and CMB, synchrotron and thermal dust in polarization. | |

| + | # Estimate ''G'' explicitly including the temperature and polarization component of T(sky) in the calibrator on the same footing as teh orbital and Solar Dipole. | ||

| + | # Compute frequency maps with these new gains. | ||

| + | # Estimate new astrophysical model from updated frequency maps using Commander. (At the moment the sky model is adjusted only for LFI frequencies.) | ||

| + | # Iterate (2)--(4). | ||

| − | + | Since the true sky signal is stationary on the sky, while the spurious gain fluctuations are not, this process will in principle converge, essentially corresponding to a generalized map-maker in which the G is estimated jointly with the sky maps (see {{PlanckPapers|planck2016-l02}} for further details). | |

| − | |||

| − | + | ===Beam-convolved dipole=== | |

| − | + | In computing the beam-convolved dipole we used an elegant algorithm to save time and computing power. In computing the cosmological dipole signal it is common to assume a pencil-like beam acting as a Dirac delta function. In this case a dipole timeline is defined as | |

| − | + | :<math> \Delta T_{D,\delta}(t) = \mathbf{P}_{\rm E}(t) \cdot \mathbf{D}_{\rm E} </math> | |

| − | + | where <b>P</b><sub>E</sub>(<i>t</i>) is the pointing direction in the observer reference frame and <b>D</b><sub>E</sub>(<i>t</i>) is the dipole axis scaled by the dipole amplitude, again in the same reference frame (here the label "E" is for "Ecliptic"). | |

| − | + | In general the true signal would have to be convolved with the beam pattern of the given radiometer, usually described as a fixed map in the beam reference frame or as a time-dependent map in the observer reference frame. In this case it is easiest to describe the convolution in the beam reference frame, since the function to be convolved then can be described by a single vector. | |

| − | + | Denoting by <math> {\sf U}(t) </math> the matrix converting from the observer to the beam reference frame, so that | |

| − | + | :<math> {\sf U}(t) \mathbf{P}_{\rm E}(t) = \mathbf{e}_z </math>, | |

| − | + | the instantaneous dipole direction in the beam reference frame is | |

| − | + | :<math> \mathbf{D}(t) = {\sf U}(t) \mathbf{D}_{\rm E} </math>. | |

| − | + | By denoting with <math> \mathbf{P} </math> a pointing direction in the beam reference frame, then | |

| − | + | :<math> \Delta T_D(t) = N \int_{4\pi} B(\mathbf{P})\,\mathbf{P} \cdot \mathbf{D}(t)\, d^3\mathbf{P} </math>, | |

| − | + | where <i>N</i> is a normalization constant defined through | |

| − | + | :<math> N^{-1} = \int_{4\pi} B(\mathbf{P}) d^3\mathbf{P} </math>. | |

| − | + | Denoting with <i>P<sub>x</sub></i>, <i>P<sub>y</sub></i>, and <i>P<sub>z</sub></i> the three Cartesian components of <b>P</b>, the integral of the dot product can be decomposed into three independent integrals: | |

| − | |||

| − | |||

| − | + | :<math> S_x = N \int_{4\pi} B(\mathbf{P}) P_x d^3\mathbf{P} </math>; | |

| + | :<math> S_y = N \int_{4\pi} B(\mathbf{P}) P_y d^3\mathbf{P} </math>; | ||

| + | :<math> S_z = N \int_{4\pi} B(\mathbf{P}) P_z d^3\mathbf{P} </math>. | ||

| + | |||

| + | These integrals define a time independent vector characteristic of each radiometer and constant over the mission. | ||

{| border="1" cellpadding="5" cellspacing="0" align="center" | {| border="1" cellpadding="5" cellspacing="0" align="center" | ||

| Line 277: | Line 262: | ||

! <math> S_z </math> | ! <math> S_z </math> | ||

|- | |- | ||

| − | | LFI18S || 4. | + | | LFI18S || 4.64653×10<sup>-3</sup> || -1.32712×10<sup>-3</sup> || 9.95771×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI18M || 2. | + | | LFI18M || 2.84449×10<sup>-3</sup> || -8.95117×10<sup>-4</sup> || 9.97353×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI19S || 4. | + | | LFI19S || 4.29488×10<sup>-3</sup> || -1.40378×10<sup>-3</sup> || 9.96128×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI19M || 4. | + | | LFI19M || 4.50970×10<sup>-3</sup> || -1.62551×10<sup>-3</sup> || 9.95733×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI20S || 5. | + | | LFI20S || 5.27978×10<sup>-3</sup> || -1.94793×10<sup>-3</sup> || 9.95146×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI20M || 4. | + | | LFI20M || 4.74206×10<sup>-3</sup> || -1.84621×10<sup>-3</sup> || 9.95483×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI21S || 5. | + | | LFI21S || 5.23772×10<sup>-3</sup> || 1.93375×10<sup>-3</sup> || 9.95176×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI21M || 4. | + | | LFI21M || 4.37131×10<sup>-3</sup> || 1.70755×10<sup>-3</sup> || 9.95851×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI22S || 3. | + | | LFI22S || 3.62730×10<sup>-3</sup> || 1.20309×10<sup>-3</sup> || 9.96790×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI22M || 3. | + | | LFI22M || 3.18177×10<sup>-3</sup> || 1.13834×10<sup>-3</sup> || 9.97055×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI23S || 3. | + | | LFI23S || 3.25825×10<sup>-3</sup> || 9.10802×10<sup>-4</sup> || 9.97061×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI23M || 2. | + | | LFI23M || 2.63850×10<sup>-3</sup> || 8.05452×10<sup>-4</sup> || 9.97552×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI24S || 1. | + | | LFI24S || 1.20077×10<sup>-3</sup> || -2.98774×10<sup>-7</sup> || 9.98979×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI24M || 1. | + | | LFI24M || 1.19670×10<sup>-3</sup> || -1.35903×10<sup>-6</sup> || 9.98945×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI25S || -2. | + | | LFI25S || -2.10060×10<sup>-4</sup> || 4.42870×10<sup>-4</sup> || 9.99657×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI25M || -2. | + | | LFI25M || -2.51334×10<sup>-4</sup> || 5.58031×10<sup>-4</sup> || 9.99546×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI26S || -1. | + | | LFI26S || -1.97667×10<sup>-4</sup> || -4.22022×10<sup>-4</sup> || 9.99670×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI26M || -2. | + | | LFI26M || -2.54267×10<sup>-4</sup> || -5.74267×10<sup>-4</sup> || 9.99521×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI27S || 5. | + | | LFI27S || 5.85555×10<sup>-3</sup> || 1.81753×10<sup>-3</sup> || 9.94483×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI27M || 4. | + | | LFI27M || 4.99628×10<sup>-3</sup> || 1.57663×10<sup>-3</sup> || 9.95292×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI28S || 6. | + | | LFI28S || 6.37507×10<sup>-3</sup> || -1.96172×10<sup>-3</sup> || 9.93966×10<sup>-1</sup> |

|- | |- | ||

| − | | LFI28M || 4. | + | | LFI28M || 4.78777×10<sup>-3</sup> || -1.54429×10<sup>-3</sup> || 9.95517×10<sup>-1</sup> |

|- | |- | ||

| − | |||

|} | |} | ||

| + | <center>'''<small>Characteristic vectors for each LFI detector.</small>'''</center> | ||

| − | By using this characteristic vector the calculation of the convolved dipole is simply defined by a dot product of the vector <math> \mathbf{S} </math> | + | By using this characteristic vector the calculation of the convolved dipole is simply defined by a dot product of the vector <math> \mathbf{S} </math> and the dipole axis rotated in the beam reference frame: |

| − | :<math> \Delta T (t) = \mathbf{S}^T \ | + | :<math> \Delta T (t) = \mathbf{S}^{\rm T} {\sf U}(t) \mathbf{D}_{\rm E} </math>. |

===Binning=== | ===Binning=== | ||

| − | In order to simplify the computation and to reduce the amount of data used in the calibration procedure the data are phase binned | + | In order to simplify the computation and to reduce the amount of data used in the calibration procedure, the data are phase binned into maps with <i>N</i><sub>side</sub>=256. During phase binning all the data are neglected that are flagged for manoeuvres, planets, and gaps, as well as those flagged in Level 1 analysis as not recoverable. |

===Fit=== | ===Fit=== | ||

| − | The first order calibration values are given by a | + | The first-order calibration values are given by a least squares fit between the signal and the dipole. For each pointing gain <i>g<sub>k</sub></i> and offset <i>b<sub>k</sub></i> values are computed by minimizing |

| − | :<math> \chi^2 = \sum_{i \in k} { | + | :<math> \chi^2 = \sum_{i \in k} {\left\{ \Delta V (t_i) - \Delta V_{\rm model} (t_i|g_k, b_k)\right\}^2 \over {\rm rms}_i^2 } </math>, |

| − | + | where the sum includes only samples outside a Galactic mask. | |

===DaCapo=== | ===DaCapo=== | ||

| − | The largest source of error in the fit arises from | + | The largest source of error in the fit arises from unmodelled sky signal Δ<i>T</i><sub>a</sub> from CMB anisotropy. |

| − | + | The procedure adopted to correct this effect (DaCapo) is described below. | |

| − | The procedure adopted | ||

| − | The uncalibrated | + | The uncalibrated TOI <i>y<sub>i</sub></i> belonging to a pointing period <i>k</i> is modelled as |

| − | :<math>y_{i}=g_{k}*(s_{i}+d_{i})+b_{k}+n</math> | + | :<math>y_{i}=g_{k}*(s_{i}+d_{i})+b_{k}+n</math>, |

| − | where the unknowns < | + | where the unknowns <i>s<sub>i</sub></i>, <i>g<sub>k</sub></i>, and <i>b<sub>k</sub></i> are, respectively, the signal per OBT, the gain per PID, and the offset per PID, and where <i>d<sub>i</sub></i> is the total dipole. |

| − | This is a | + | This is a nonlinear χ<sup>2</sup> minimization problem. It can be linearized using an iterative procedure: for each iteration step a linearized model TOI is built as |

| − | :<math>y_{i,model}=g_{k}(-1)* | + | :<math>y_{i,model}=g_{k}(-1)*\left\{s_{i}-s_{i}(-1)\right\}+g_{k}*\left\{d_{i}+a_{i}(-1)\right\}+b_{k}</math>, |

| − | where < | + | where <i>g<sub>k</sub></i>(-1) and <i>s<sub>i</sub></i>(-1) are the gain and sky signal computed at the previous iteration step. |

| − | |||

| − | |||

| + | A linear fit is performed between <i>y<sub>i</sub></i> and <i>y</i><sub><i>i</i>,model</sub> to obtain the new <i>g<sub>k</sub></i> and <i>s<sub>i</sub></i> values. The procedure is iterated until convergence. | ||

To reduce the impact of the noise during the iterative procedure the sky estimation is built using data from both radiometers of the same horn. | To reduce the impact of the noise during the iterative procedure the sky estimation is built using data from both radiometers of the same horn. | ||

===Smoothing=== | ===Smoothing=== | ||

| − | To improve accuracy given by the iterative algorithm and remove noise from the solution a smoothing algorithm must be | + | To improve accuracy given by the iterative algorithm and remove noise from the solution a smoothing algorithm must be applied. |

====OSGTV==== | ====OSGTV==== | ||

| − | OSGTV is a 3 step smoothing algorithm, implemented with a C++ code. | + | OSGTV is a 3-step smoothing algorithm, implemented with a C++ code. |

The gain reconstructed with DaCapo can be expressed as | The gain reconstructed with DaCapo can be expressed as | ||

| − | :<math>G_{raw}(t,T(t))=G_{true}(t,T(t))+G_{noise}(t)</math> | + | :<math>G_{\rm raw}(t,T(t))=G_{\rm true}(t,T(t))+G_{\rm noise}(t)</math>, |

| − | where the < | + | where the <i>T</i>(<i>t</i>) function represents temperature fluctuations of the Focal Plane. |

| − | The time dependence of the | + | The time dependence of the "real" gain <i>G</i><sub>true</sub> is modelled as the superposition of a "slow" component, with a timescale of about 3 months, and a "fast" component with a timescale of a few PIDs: |

| − | :<math>G_{true}=G_{slow}+G_{fast}</math>. | + | :<math>G_{\rm true}=G_{\rm slow}+G_{\rm fast}</math>. |

| − | The slow component | + | The slow component takes into account the seasonal variations of the thermal structure of the spacecraft due to the orbital motion, while the "fast" component describes the thermal effects of the electronics and compressors, as well as single events like the sorption cooler switchover. |

| − | To disentangle the components of | + | To disentangle the components of <i>G</i><sub>true</sub>, we apply a parametric "hybrid" approach in three steps. |

| − | <b>Step 1. </b> For each PID < | + | <b>Step 1. </b> For each PID <i>i</i>, we used the 4-K total-power <i>V</i><sub>load</sub>(<i>i</i>) and the signal from temperature detectors in the focal plane <i>T<sub>i</sub></i>, subsampled at 1 sample/PID, to track gain changes. This is implemented through a linear fit between <i>V</i><sub>load</sub>(<i>i</i>) × <i>G</i><sub>raw</sub> and <i>T<sub>i</sub></i>: |

| − | :<math>V_{load}(i) | + | :<math>V_{\rm load}(i)\times G_{\rm raw}=K_{0}+K_{1} \times (T_{i}-\langle T_{\rm MW}\rangle)</math>, |

| − | where the window length | + | where the window length MW of the moving average is proportional to the variance of the dipole in the PID being considered. |

| − | The resulting gain is | + | The resulting gain is |

| − | :<math> G_{i} = \frac{ K_{0} + K_{1} | + | :<math> G_{i} = \frac{ K_{0} + K_{1} \times (T_{i} - \langle T_{\rm MW} \rangle ) }{ V_{\rm load}(i) } </math>. |

| − | <b>Step 2. </b> The "fast" component < | + | <b>Step 2. </b> The "fast" component <i>G</i><sub>fast</sub> is recovered as follows: we define a maximum moving average window length WL<sub>max</sub>,and for each PID we compute the variance σ<sup>2</sup><sub>i</sub> of <i>G<sub>i</sub></i> in this window. |

| − | We define a percentile on the ordered variance array and we compute the corresponding value of the variance < | + | We define a percentile on the ordered variance array and we compute the corresponding value of the variance σ<sup>2</sup><sub>p</sub>. The window length for each PID is then computed as |

| − | :<math> | + | :<math>{\rm WL}_{i}=\frac{\sigma_{\rm p}^2 \times {\rm WL}_{\rm max}}{\sigma_{i}^2}</math>. |

| − | If < | + | If WL<sub><i>i</i></sub> > WL<sub>max</sub> we impose WL<sub><i>i</i></sub> = WL<sub>max</sub>. With these window lengths |

| − | a | + | a moving window average is performed on <i>G<sub>i</sub></i>. |

| − | The averaged gain vector is subtracted | + | The averaged gain vector is subtracted from the raw hybrid gain to obtain <i>G</i><sub>fast</sub>. |

| − | <b>Step 3. </b> We perform a | + | <b>Step 3. </b> We perform a moving window average on <i>G<sub>i</sub></i> and we compute the variances of the smoothed array. The window length is computed with a linear interpolation between a minimum length WL<sub>min</sub> defined in the dipole minima and a maximum WL<sub>max</sub> defined in the dipole maxima. The array of gain variances is weighted with the variance of the dipole. |

| − | We set a percentile on the variance array and we find the corresponding variance value < | + | We set a percentile on the variance array and we find the corresponding variance value σ<sup>2</sup><sub>p</sub>. Around this value we search for local maxima of the variance array, and we split the domain of the gain into subsets between consecutive maxima. |

| − | For each subset we perform a | + | For each subset we perform a moving average with the corresponding window length. |

| − | The "slow" component < | + | The "slow" component <i>G</i><sub>slow</sub> is given by the union of these subsets. |

| − | ===Gain | + | ===Gain application=== |

| − | The last step in TOI processing is the creation of the calibrated stream. For each sample we have | + | The last step in TOI processing is the creation of the calibrated stream. For each sample we have |

| − | :<math> | + | :<math> {\rm TOI}_{\rm cal}(t) = ({\rm TOI}_{\rm diff}(t) – {\rm offset}(k)) \, g(k) \, - \, {\rm convDip}(t)\, - \, T_{\rm stray}(t)</math>, |

| − | where t is the time and k is the pointing period, | + | where <i>t</i> is the time and <i>k</i> is the pointing period, "convDip" is the CMB dipole convolved with the beam, and <i>T</i><sub>stray</sub> is the stray light. |

== Noise == | == Noise == | ||

| − | + | The aim of this pipeline step is the reconstruction of the noise parameters from calibrated flight TOI. The goal is two-fold. On the one hand we need to know the actual noise properties of our instrument in order to properly take them into account, especially during later processing and analysis steps, such as map-making and power spectrum estimation. On the other hand, evaluation of noise properties during the instrument lifetime is a way to track down possible variations, anomalies, and general deviations from the expected behaviour. | |

| − | our instrument in order to properly take them into account especially during | ||

| − | |||

=== Operations === | === Operations === | ||

| − | Noise estimation is performed on calibrated data | + | Noise estimation is performed on calibrated data; since we would like to track possible noise variations through the mission lifetime, we |

| − | select data in chunks of | + | select data in chunks of five ODs (Operational Days). These data are processed by the ROMA iterative generalized least-squares (IGLS) |

| − | + | mapmaking algorithm, which includes a noise estimation tool. In general IGLS mapmaking is a quite costly in terms of time and | |

| − | resources required. However the length of the data is such that | + | resources required. However, the length of the data is such that it can run on the DPC cluster in a very short time (around 1-2 minutes). |

The method implemented can be summerized as follows. We model the calibrated TOI as | The method implemented can be summerized as follows. We model the calibrated TOI as | ||

| − | :<math> \mathbf{\Delta T} = \ | + | :<math> \mathbf{\Delta T} = {\sf P} \mathbf{m} + \mathbf{n} </math>, |

| − | where < | + | where <b>n</b> is the noise vector and P is the pointing matrix that links a pixel in the map <b>m</b> |

| − | with a sample in the TOI < | + | with a sample in the TOI <b>d</b>. The initial estimation of the signal is obtained by simply rebinning the TOI into a map. |

Then an iterative approach follows in which both signal and noise are estimated according to | Then an iterative approach follows in which both signal and noise are estimated according to | ||

| − | :<math> \mathbf{\hat{n}_i | + | :<math> \mathbf{\hat{n}}_i = \mathbf{\Delta T} - {\sf P}\mathbf{\hat{m}}_i </math> |

| − | : <math> \mathbf{\hat{m}_{i+1 | + | :<math> \mathbf{\hat{m}}_{i+1} = \left({\sf P}^{\rm T}\hat{\sf N}_i^{-1}{\sf P}\right)^{-1}{\sf P}^{\rm T}\hat{\sf N}_i^{-1}\Delta T </math>, |

| − | where < | + | where N<sub>i</sub> is the noise covariance matrix in the time domain resulting from iteration <i>i</i>. We find that convergence is |

| − | achieved. | + | achieved after three iterations. |

| − | We then perform an FFT (Fast Fourier Transform) on the noise | + | We then perform an FFT (Fast Fourier Transform) on the noise timestream output from from the iterative approach, and fit the resulting spectrum. |

| − | === Fitting | + | === Fitting pipeline === |

| − | As already done in the 2013 release, we estimate noise properties | + | As already done in the 2013 and 2015 release, we estimate the parameters of the noise properties (i.e., white noise level, knee-frequency, and slope of the |

| − | low-frequency noise component) by means of | + | low-frequency noise component) by means of an MCMC approach. Therefore for the spectra just described we first compute the white noise level by taking the mean of the last 10% of the data (at 30 GHz due to the higher value of the |

| − | + | knee-frequency this quantity is reduced to 5%). Once the white noise level has been determined we proceed with the actual fitting | |

| − | knee-frequency this quantity is reduced to 5%). Once white noise level has been determined we proceed with the actual fitting | + | of the knee-frequency and slope. The resulting values reported are the medians of the fitted values for our 5-OD chunks, through the |

| − | of knee-frequency and slope. The resulting values reported | ||

whole mission lifetime. | whole mission lifetime. | ||

| Line 444: | Line 424: | ||

|- | |- | ||

! Horn | ! Horn | ||

| − | ! White | + | ! White noise M [<math>\mu</math>K s<math>^{1/2}</math>] |

| − | ! White | + | ! White noise S [<math>\mu</math>K s<math>^{1/2}</math>] |

! Knee-frequency M [mHz] | ! Knee-frequency M [mHz] | ||

! Knee-frequency S [mHz] | ! Knee-frequency S [mHz] | ||

| Line 451: | Line 431: | ||

! Slope S | ! Slope S | ||

|- | |- | ||

| − | | 18 || | + | | 18 || 512.5±2.0 || 466.7±2.3 || 14.8±2.1 || 17.8±1.5 || -1.06±0.08 || -1.18±0.10 |

|- | |- | ||

| − | | 19 || | + | | 19 || 578.9±2.1 || 554.2±2.2 || 11.7±1.1 || 13.7±1.3 || -1.21±0.23 || -1.10±0.13 |

|- | |- | ||

| − | | 20 || | + | | 20 || 586.9±1.9 || 620.0±2.7 || 7.9±1.5 || 5.6±1.0 || -1.19±0.27 || -1.30±0.33 |

|- | |- | ||

| − | | 21 || | + | | 21 || 450.4±1.4 || 559.8±1.8 || 37.9±5.7 ||13.1±1.3 || -1.24±0.08 ||-1.20±0.08 |

|- | |- | ||

| − | | 22 || 490. | + | | 22 || 490.1±1.3 || 530.9±2.2 || 9.5±1.7 ||14.3±6.7 || -1.41±0.25 || -1.23±0.27 |

|- | |- | ||

| − | | 23 || | + | | 23 || 503.9±1.6 || 539.1±1.7 || 29.6±1.1 ||58.9±1.6 || -1.07±0.02 || -1.21±0.02 |

|- | |- | ||

| − | | 24 || | + | | 24 || 462.8±1.3 || 400.5±1.1 || 26.9±1.0 ||89.6±13.8 || -0.94±0.01 || -0.91±0.01 |

|- | |- | ||

| − | | 25 || 415. | + | | 25 || 415.2±1.3 || 395.0±3.1 || 19.7±1.0 ||46.8±1.9 || -0.85±0.01 || -0.90±0.01 |

|- | |- | ||

| − | | 26 || | + | | 26 || 482.6±1.9 || 422.9±2.5 || 64.4±1.8 ||70.7±18.7 || -0.92±0.01 || -0.75±0.07 |

|- | |- | ||

| − | | 27 || 281.5 | + | | 27 || 281.5±2.0 || 302.8±1.8 || 173.7±3.1 ||109.6±2.5 ||-0.93±0.01|| -0.91±0.01 |

|- | |- | ||

| − | | 28 || 317. | + | | 28 || 317.9±2.4 || 286.1±2.1 || 128.5±10.9 ||44.1±2.2 ||-0.93±0.01|| -0.90±0.02 |

|- | |- | ||

|} | |} | ||

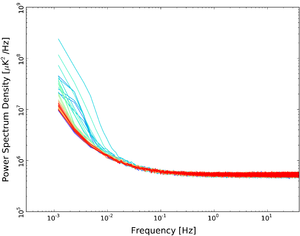

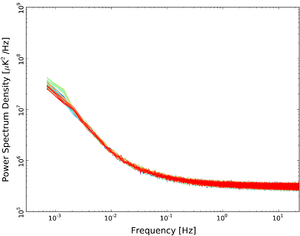

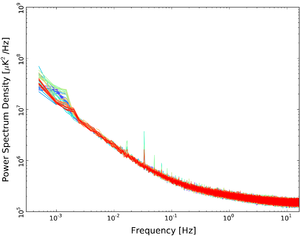

| − | Time variations of noise parameters are a good tracer of possible | + | Time variations of noise parameters are a good tracer of possible modifications in the instrument behaviour and we know that |

| − | some events capable | + | some events capable of affecting instrument behaviour happened during the mission. Variations of the physical temperature |

| − | of the instrument due to the transition in the operations from the first sorption | + | of the instrument due to the transition in the operations from the first sorption cooler to the second one, as well as the |

| − | observed degradation in the performances of the first cooler are events that clearly show their | + | observed degradation in the performances of the first cooler, are events that clearly show their imprints in the |

variation of the noise spectra. | variation of the noise spectra. | ||

| − | In the following figure we report a representative sample of noise spectra, one for each frequency channel (from left to right | + | In the following figure we report a representative sample of noise spectra, one for each frequency channel (from left to right LFI 18M, LFI 25S, and LFI 27M), covering |

| − | the whole mission lifetime. | + | the whole mission lifetime. The white noise is extremely stable at the level of 0.1%. As already noted in the |

| − | 2013 release, knee- | + | 2013 and 2015 release, knee-frequencies and slopes are stable until OD 326 and show clear variations and deviations from the |

| − | simple one knee-frequency one slope model. This is a sign of the degradation of the first cooler inducing thermal variations | + | simple one knee-frequency and one slope model. This is a sign of the degradation of the first cooler inducing thermal variations, |

| − | with a characteristic knee-frequency (different from the radiometric one) and with a | + | with a characteristic knee-frequency (different from the radiometric one) and with a steeper slope. Once the |

| − | second cooler | + | second cooler became operational and performed as expected, the noise spectra gradually returned to the original |

| − | shape at the beginning of the mission. This behaviour is visible although not identical at each frequency | + | shape at the beginning of the mission. This behaviour is visible, although not identical, at each frequency for |

| − | several reasons e.g. intrinsic thermal susceptibility and position on the focal plane that determines the | + | several reasons e.g., intrinsic thermal susceptibility and position on the focal plane that determines the |

actual thermal transfer function. | actual thermal transfer function. | ||

| + | [[File:noise_spectra_LFI18M_DX12_legend_LOW.png|300px|'"'LFI 18M.'"']] | ||

| + | [[File:noise_spectra_LFI25S_DX12_legend_LOW.png|300px |'"'LFI 25S.'"']] | ||

| + | [[File:noise_spectra_LFI27M_DX12_legend_LOW.png|300px|'"'LFI 27M.'"']] | ||

| − | + | <center><small>'''Noise power spectra for selected LFI radiometers.'''</small></center> | |

| − | |||

| − | |||

| − | |||

== References == | == References == | ||

Latest revision as of 16:09, 4 July 2018

Contents

Overview[edit]

The LFI Level 2 Pipeline analyses data from each horn of the instrument separately, one pointing period at a time, and stores the results in an object the length of an OD. Each diode of the horn is corrected for systematic effects. Next, measurements of the sky and the 4-K load are differenced, then the signals from one diode are combined with signals from the complementary diode in the same radiometer. Finally, photometric calibration is applied for each horn.

Pre-processing[edit]

Before the Level 2 pipeline is run, the Mission information and data sampling divisions are stored in the database, in order to improve the analysis.

The Mission information is a set of objects, one for each Operational Day (OD, as defined in the glossary), in which are stored pointing period data: the DPC pointing ID (where 1 is the first pointing of the nominal mission); PSO pointing ID; start OBT of the pointing manoeuvre; start OBT of the stable pointing period; OBT of the end of the stable pointing period; and spin axis ecliptic longitude and latitude.

The sampling information is a set of objects, one for each LFI frequency, in which are stored for each pointing ID: the start OBT of the pointing manoeuvre; th start OBT of the stable pointing period; the end OBT of the pointing period; the number of samples of the pointing, number of stable samples of the pointing; the starting sample of the stable pointing period; and the sample number from the start of the nominal mission. Valid samples and OBTs are defined to be those where any of the radiometers in a frequency band contain valid data.

ADC correction[edit]

During the analysis of LFI date, a systematic effect was discovered that was common to both white noise and calibration. This turned out to be a nonlinearity in the analogue-to-digital converter (ADC) on board, as discussed further in Planck-2013-II[1], Planck-2013-III[2], Planck-2015-A03[3], Planck-2015-A04[4] and Planck-2020-A2[5].

Evaluation[edit]

The mathematical model represents the digital ADC output as:

- ,

where V is the voltage input, γ is the DAE gain, Δ is the DAE offset and x0 is the DAE Tzero.

We can model the non-linearity as a function of the input voltage R(V). So we have the apparent inferred voltage V' and we can link it to the actual input voltage with:

- ,

so that

- .

Since V >> Δ and V γ >> x0, we can use the much simpler relation

- ,

and we expect this to be very near to unity for all V.

To find the response curve we have only the apparent voltage to work with, so we had to use the inverse response function R'(V') and replace the real input voltage with Tsys times the time varying gain factor G(t):

- .

If we introduce a small signal on top of Tsys, which leads to increased detected voltage and corresponding apparent voltage increment, then

- ,

so carrying out the differentiation with respect to V' of the relation between true and apparent signal voltage leads to

- .

We now assume Tsys and δT are fixed and that the variations are due to slow drifts in the gain. Then we can isolate the terms

- ,

- .

Combining the equations to remove the gain factor, we have

- .

Rearranging and setting a = δT/Tsys gives

- .

We see the expected direct proportionality of δV' to V', based on the assumption that the variations in voltage are due to overall gain drift, so that the amplitude of voltage and signal will vary together. Then there is the additional differential term, which will pull the signal amplitude away from the linear relationship. So if we plot measured white noise or dipole gain factor against recovered voltage, we should see a linear curve with variations due to local slope changes at particular voltages. The linear part can be taken out and the differential part fitted. This was numerically integrated to obtain the inverse response curve, which is then used to convert the measured voltages to corrected voltages.

Application[edit]

In the 2015 release we fit white noise amplitudes binned with respect to detector voltage with a simple spline curve and we translate results into a correction curve as described in Planck-2015-A03[3]. Instead, the fit now relies on a more physically motivated function based on voltage steps (see Planck-2020-A2[5] for futher details). We compared the results between this method and the previous one by means of null-maps, checking the consistency of the resulting new gain solution with the new ADC correction applied. This was done by computing the rms scatter from the 8 different survey maps taking into account pixel hits and zero levels. A ``goodness" parameter can then be derived from the mean level of the masked null-map made between these survey scatter maps. The 70 and 44 GHz null-maps failed to show significant improvements but this was not the case at 30 GHz. Inspecting the ADC solutions it became clear that the higher noise per radiometer and low ADC non-linearity at 70 GHz did not allow for a good fit. Meanwhile the ADC effect was so large at 44 GHz that new model could not reproduce some of the details, and so led to some small residuals. The 30 GHz system has much lower noise and less thermal drift in the gain, meaning that more voltage levels were revisited more often thus yielding a more consistent ADC model curve. It was therefore decided to keep this new solution only for the 30 GHz channels. The other two channels thus have the same correction for ADC non-linearity as in the previous release.

Spike removal[edit]

Some of the LFI receivers exhibit a small artefact with exactly 1-s period, that produces effects visible in the power spectra. The effect is a set of spikes at 1 Hz and harmonics. The spurious signal can be well modelled and is removed from the timelines. More information can be found in Planck-2013-II[1], Planck-2013-III[2], Planck-2015-A03[3], Planck-2015-A04[4] and Planck-2020-A2[5].

Modelling[edit]

The cause of the spikes at 1 Hz and harmonics is a tiny 1-s square-wave embedded in signals from the affected channels. The method used to estimate the 1-Hz signal is to build a template in the time domain, synchronized with the spurious signal. The first step is to divide each second of data into time bins using OBT. The number of bins is computed using

where fsamp is the sampling frequency and is 136 Hz at 70 GHz, 80 at 44 GHz, and 56 at 30 GHz. Then the vector of bins is initialized with time intervals. To avoid aliasing effects, the template resolution is √3. We can write the process adding indices to the time sample; here a lower index denotes the particular time sample, while the upper index labels the bin into which the sample falls. The linear filter can be written as

- .

Here Δx(ti) is the filter weight, which is determined by where the sample lies within the bin. If we use tj with only an upper index to denote the start of each bin, then we can write the filter weight as

- .

In other words, the filter weight is the time sample value minus the start of the bin divided by the width of the bin.

We must estimate the parameters aj from the data. With the assumption that the instrument has stable noise properties, we can use a least squares algorithm to estimate the bin values:

- .

This can be represented in the matrix equation

- ,

with the following definitions:

- ;

- ;

- ;

- ;

- .

With these definitions we have to make use of periodic boundary conditions to obtain the correct results, such that if k = 0, then k-1 = n-1 and if k = n-1, then k+1 = 0. Once this is done, we have a symmetric tridiagonal matrix with additional values at the upper right and lower left corners. The matrix can be solved with LU decomposition. In order to be certain of the numerical accuracy of the result, we can perform a simple iteration. The solving of the linear system and the iterative improvement of the solution are implemented as suggested in Numerical Recipes (Press et al., Cambridge University Press, 2007).

Application[edit]

For each of the 44 LFI diodes there is a corresponding object in the Database. After studying the amplitude of the spikes at other LFI frequencies, we chose to apply the correction only to the 44 GHz radiometers. Each object contains three columns: the bins start time vector; the sky amplitudes; and the reference amplitudes.

For each sample the value to be subtracted is computed using

- ,

where k is the index of the bins at a given time.

Filling gaps in the data[edit]

During the mission, a small number of data packets were lost (Planck-2013-II[1], Planck-2015-A03[3] and Planck-2020-A2[5]). Moreover, in two different and very peculiar situations, LFI was shut down and restarted, giving inconsistencies in data sampling. None of these data are used for scientific purposes, but to avoid discrepancies in data analysis all of the radiometers at the same frequency must have the same number of samples.

To ensure this, we compare the length of the data stream to be reduced in a specific pointing period to the data stored in the sample information object. If the length is not the same, the OBT vector is filled with missing sample times, the data vector is filled with zeros, and in the flag column the bit for "gap" is entered.

Gain modulation factor[edit]

The pseudo-correlation design of the LFI radiometers allows for a dramatic reduction of 1/f noise when the Vsky and Vload outputs are differenced (see LFI instrument description). The two streams are slightly unbalanced, since one looks at the 2.7-K sky and the other looks at the 4.5-K reference load. To force the mean of the difference to zero, the load signal is multiplied by the gain modulation factor (R). For each pointing period this factor is computed using (see equation (3) in LFI description)

- .

Then the data are differenced using

- .

This value for R minimizes both the 1/f noise and the white noise in the difference timestream. The i index represents the diode and can be 0 or 1.

At this point, we also set the manoeuvre flag bit to identify those samples that have missing data, using the information stored in the sampling information object. This identifies which data to ignore in the next step of the Pipeline.

The R values are stored in the database. At the same time the mean values of Vsky and Vload are stored, so they can be used in other steps of the analysis.

Diode combination[edit]

The two complementary diodes of each radiometer are combined. The relative weights of the diodes in the combination are chosen for optimal noise. We assign relative weights to the uncalibrated diode streams based on their first-order calibrated noise.

Evaluation[edit]

From first-order calibration we compute an absolute gain G0 and G1, subtract an estimated sky signal and calculate the calibrated white noise σ0 and σ1, for the pair of diodes (see equation 6 in LFI description). The weights for the two diodes (i = 0 or 1) are

- ,

where the weighted calibration constant is given by

- .

The weights are fixed to a single value per diode for the entire data set. Small variations in the relative noise of the diodes would in principle require that we recalculate the weights on shorter timescales; however, we decided that a time-varying weight could possibly induce more significant (and hard to model) systematics, so we chose a single best estimate for the weights for each diode pair.

- Weights used in combining diodes.

Application[edit]

The weights in the table above are used in the formula

- .

Planet flagging[edit]

Extraction method[edit]

Measurements of planets have been formed from samples containing flux from the planet, plus a surrounding region, projected onto a grid (microstripes), by assuming an elliptical Gaussian beam using parameters from instrument database.

Microstripes are a way to extract and store relevant samples for planet detection. Here relevant samples are those affected by the planet plus samples in the neighbourhood (to establish a background level). The search radius for selecting samples as relevant is 5° around the planet's position, computed at the pointing period mid-time. For each sample we store SCET (Spacecraft Event Time), pointing directions, and calibrated temperature. Destriping is applied during the application of this procedure.

Random errors are estimated by taking the variance of samples entering each micromap pixel. This method is fast but not exact, since the variance is larger near a bright source. This can cause the noise to be overestimated by a factor of 2; however, given the large S/N for planetary observations, that is not a major drawback.

The apparent position of a planet as seen from Planck at a given time is derived from JPL Horizons. Positions are tabulated in steps of 15 minutes and then linearly interpolated at the sampling frequency of each detector. JPL Horizons tables allow us also to derive other quantities such as the planet-Planck distance and the planet-Sun distance, as well as the planet angular diameter, which affects the apparent brightness of the planet.

The antenna temperature is a function of the dilution factor, according to

- ,

where Tant,obs and Tant,1 are the observed and reduced Tant, θ is the instantaneous angular diameter of the planet, and bFWHM the beam full-width at half-maximum.

With the above definition Tant,1 could be considered as the Tant for a planet with bFWHM = θ, but a more convenient view is to take a reference dilution factor D0, as the dilution factor for a standardized angular diameter for the planet and fiducial FWHM beam bFWHM, θ0, to have

- ,

leading to the following definition of a standardized Tant:

- .

This has the advantage of removing variations among different detectors and transits, while keeping the value of Tant similar to that seen by the instrument and then allowing a prompt comparison of signals and sensitivities.

Application[edit]

The OBT vectors found by the searches are saved in a set of objects, one for each horn. In the Level 2 pipeline those OBTs are compared with the OBT vector of the data to set the planet bit flag where needed.

Photometric calibration[edit]

Photometric calibration is the procedure used to convert data from volts to kelvins. The source of the calibration is the well understood CMB dipole, caused by the motion of the Solar System with respect to the CMB reference frame. To this signal we add the modulation induced by the orbital motion of Planck around the Sun. The resulting signal is then convoluted with the horn beam to obtain the observed dipole. For details refer to Planck-2015-A06[6] and Planck-2020-A2[5].

In the 2018 release we decided to include the sky signal in the calibration process, on the same footing as the orbital and Solar dipole, including both temperature and polarization fluctuations. Unfortunately, this is non-trivial, since the purpose of the experiment, in this case, is precisely to measure the polarized emission from the sky. A good approximation, however, can be estalished through an iterative process that alternates between gain calibration, map making and astrophysical component separation:

- Let T(sky) be the full best-fit Planck2015 astrophysical sky model, including CMB, synchrotron, free-free, thermal and spinning dust and CO emission for temperature maps, and CMB, synchrotron and thermal dust in polarization.

- Estimate G explicitly including the temperature and polarization component of T(sky) in the calibrator on the same footing as teh orbital and Solar Dipole.

- Compute frequency maps with these new gains.

- Estimate new astrophysical model from updated frequency maps using Commander. (At the moment the sky model is adjusted only for LFI frequencies.)

- Iterate (2)--(4).

Since the true sky signal is stationary on the sky, while the spurious gain fluctuations are not, this process will in principle converge, essentially corresponding to a generalized map-maker in which the G is estimated jointly with the sky maps (see Planck-2020-A2[5] for further details).

Beam-convolved dipole[edit]

In computing the beam-convolved dipole we used an elegant algorithm to save time and computing power. In computing the cosmological dipole signal it is common to assume a pencil-like beam acting as a Dirac delta function. In this case a dipole timeline is defined as

where PE(t) is the pointing direction in the observer reference frame and DE(t) is the dipole axis scaled by the dipole amplitude, again in the same reference frame (here the label "E" is for "Ecliptic").

In general the true signal would have to be convolved with the beam pattern of the given radiometer, usually described as a fixed map in the beam reference frame or as a time-dependent map in the observer reference frame. In this case it is easiest to describe the convolution in the beam reference frame, since the function to be convolved then can be described by a single vector.

Denoting by the matrix converting from the observer to the beam reference frame, so that

- ,

the instantaneous dipole direction in the beam reference frame is

- .

By denoting with a pointing direction in the beam reference frame, then

- ,

where N is a normalization constant defined through

- .

Denoting with Px, Py, and Pz the three Cartesian components of P, the integral of the dot product can be decomposed into three independent integrals:

- ;

- ;

- .

These integrals define a time independent vector characteristic of each radiometer and constant over the mission.

| Detector ID | |||

|---|---|---|---|

| LFI18S | 4.64653×10-3 | -1.32712×10-3 | 9.95771×10-1 |

| LFI18M | 2.84449×10-3 | -8.95117×10-4 | 9.97353×10-1 |

| LFI19S | 4.29488×10-3 | -1.40378×10-3 | 9.96128×10-1 |

| LFI19M | 4.50970×10-3 | -1.62551×10-3 | 9.95733×10-1 |

| LFI20S | 5.27978×10-3 | -1.94793×10-3 | 9.95146×10-1 |

| LFI20M | 4.74206×10-3 | -1.84621×10-3 | 9.95483×10-1 |

| LFI21S | 5.23772×10-3 | 1.93375×10-3 | 9.95176×10-1 |

| LFI21M | 4.37131×10-3 | 1.70755×10-3 | 9.95851×10-1 |

| LFI22S | 3.62730×10-3 | 1.20309×10-3 | 9.96790×10-1 |

| LFI22M | 3.18177×10-3 | 1.13834×10-3 | 9.97055×10-1 |

| LFI23S | 3.25825×10-3 | 9.10802×10-4 | 9.97061×10-1 |

| LFI23M | 2.63850×10-3 | 8.05452×10-4 | 9.97552×10-1 |

| LFI24S | 1.20077×10-3 | -2.98774×10-7 | 9.98979×10-1 |

| LFI24M | 1.19670×10-3 | -1.35903×10-6 | 9.98945×10-1 |

| LFI25S | -2.10060×10-4 | 4.42870×10-4 | 9.99657×10-1 |

| LFI25M | -2.51334×10-4 | 5.58031×10-4 | 9.99546×10-1 |

| LFI26S | -1.97667×10-4 | -4.22022×10-4 | 9.99670×10-1 |

| LFI26M | -2.54267×10-4 | -5.74267×10-4 | 9.99521×10-1 |

| LFI27S | 5.85555×10-3 | 1.81753×10-3 | 9.94483×10-1 |

| LFI27M | 4.99628×10-3 | 1.57663×10-3 | 9.95292×10-1 |

| LFI28S | 6.37507×10-3 | -1.96172×10-3 | 9.93966×10-1 |

| LFI28M | 4.78777×10-3 | -1.54429×10-3 | 9.95517×10-1 |

By using this characteristic vector the calculation of the convolved dipole is simply defined by a dot product of the vector and the dipole axis rotated in the beam reference frame:

- .

Binning[edit]

In order to simplify the computation and to reduce the amount of data used in the calibration procedure, the data are phase binned into maps with Nside=256. During phase binning all the data are neglected that are flagged for manoeuvres, planets, and gaps, as well as those flagged in Level 1 analysis as not recoverable.

Fit[edit]

The first-order calibration values are given by a least squares fit between the signal and the dipole. For each pointing gain gk and offset bk values are computed by minimizing

- ,

where the sum includes only samples outside a Galactic mask.

DaCapo[edit]

The largest source of error in the fit arises from unmodelled sky signal ΔTa from CMB anisotropy. The procedure adopted to correct this effect (DaCapo) is described below.

The uncalibrated TOI yi belonging to a pointing period k is modelled as

- ,

where the unknowns si, gk, and bk are, respectively, the signal per OBT, the gain per PID, and the offset per PID, and where di is the total dipole.

This is a nonlinear χ2 minimization problem. It can be linearized using an iterative procedure: for each iteration step a linearized model TOI is built as

- ,

where gk(-1) and si(-1) are the gain and sky signal computed at the previous iteration step.

A linear fit is performed between yi and yi,model to obtain the new gk and si values. The procedure is iterated until convergence. To reduce the impact of the noise during the iterative procedure the sky estimation is built using data from both radiometers of the same horn.

Smoothing[edit]

To improve accuracy given by the iterative algorithm and remove noise from the solution a smoothing algorithm must be applied.

OSGTV[edit]

OSGTV is a 3-step smoothing algorithm, implemented with a C++ code.

The gain reconstructed with DaCapo can be expressed as

- ,

where the T(t) function represents temperature fluctuations of the Focal Plane. The time dependence of the "real" gain Gtrue is modelled as the superposition of a "slow" component, with a timescale of about 3 months, and a "fast" component with a timescale of a few PIDs:

- .

The slow component takes into account the seasonal variations of the thermal structure of the spacecraft due to the orbital motion, while the "fast" component describes the thermal effects of the electronics and compressors, as well as single events like the sorption cooler switchover.

To disentangle the components of Gtrue, we apply a parametric "hybrid" approach in three steps.

Step 1. For each PID i, we used the 4-K total-power Vload(i) and the signal from temperature detectors in the focal plane Ti, subsampled at 1 sample/PID, to track gain changes. This is implemented through a linear fit between Vload(i) × Graw and Ti:

- ,

where the window length MW of the moving average is proportional to the variance of the dipole in the PID being considered. The resulting gain is

- .

Step 2. The "fast" component Gfast is recovered as follows: we define a maximum moving average window length WLmax,and for each PID we compute the variance σ2i of Gi in this window. We define a percentile on the ordered variance array and we compute the corresponding value of the variance σ2p. The window length for each PID is then computed as

- .

If WLi > WLmax we impose WLi = WLmax. With these window lengths a moving window average is performed on Gi. The averaged gain vector is subtracted from the raw hybrid gain to obtain Gfast.

Step 3. We perform a moving window average on Gi and we compute the variances of the smoothed array. The window length is computed with a linear interpolation between a minimum length WLmin defined in the dipole minima and a maximum WLmax defined in the dipole maxima. The array of gain variances is weighted with the variance of the dipole. We set a percentile on the variance array and we find the corresponding variance value σ2p. Around this value we search for local maxima of the variance array, and we split the domain of the gain into subsets between consecutive maxima. For each subset we perform a moving average with the corresponding window length. The "slow" component Gslow is given by the union of these subsets.

Gain application[edit]

The last step in TOI processing is the creation of the calibrated stream. For each sample we have

- ,

where t is the time and k is the pointing period, "convDip" is the CMB dipole convolved with the beam, and Tstray is the stray light.

Noise[edit]

The aim of this pipeline step is the reconstruction of the noise parameters from calibrated flight TOI. The goal is two-fold. On the one hand we need to know the actual noise properties of our instrument in order to properly take them into account, especially during later processing and analysis steps, such as map-making and power spectrum estimation. On the other hand, evaluation of noise properties during the instrument lifetime is a way to track down possible variations, anomalies, and general deviations from the expected behaviour.

Operations[edit]

Noise estimation is performed on calibrated data; since we would like to track possible noise variations through the mission lifetime, we select data in chunks of five ODs (Operational Days). These data are processed by the ROMA iterative generalized least-squares (IGLS) mapmaking algorithm, which includes a noise estimation tool. In general IGLS mapmaking is a quite costly in terms of time and resources required. However, the length of the data is such that it can run on the DPC cluster in a very short time (around 1-2 minutes).

The method implemented can be summerized as follows. We model the calibrated TOI as

- ,

where n is the noise vector and P is the pointing matrix that links a pixel in the map m with a sample in the TOI d. The initial estimation of the signal is obtained by simply rebinning the TOI into a map. Then an iterative approach follows in which both signal and noise are estimated according to

- ,

where Ni is the noise covariance matrix in the time domain resulting from iteration i. We find that convergence is achieved after three iterations.

We then perform an FFT (Fast Fourier Transform) on the noise timestream output from from the iterative approach, and fit the resulting spectrum.

Fitting pipeline[edit]

As already done in the 2013 and 2015 release, we estimate the parameters of the noise properties (i.e., white noise level, knee-frequency, and slope of the low-frequency noise component) by means of an MCMC approach. Therefore for the spectra just described we first compute the white noise level by taking the mean of the last 10% of the data (at 30 GHz due to the higher value of the knee-frequency this quantity is reduced to 5%). Once the white noise level has been determined we proceed with the actual fitting of the knee-frequency and slope. The resulting values reported are the medians of the fitted values for our 5-OD chunks, through the whole mission lifetime.

| Horn | White noise M [K s] | White noise S [K s] | Knee-frequency M [mHz] | Knee-frequency S [mHz] | Slope M | Slope S |

|---|---|---|---|---|---|---|

| 18 | 512.5±2.0 | 466.7±2.3 | 14.8±2.1 | 17.8±1.5 | -1.06±0.08 | -1.18±0.10 |

| 19 | 578.9±2.1 | 554.2±2.2 | 11.7±1.1 | 13.7±1.3 | -1.21±0.23 | -1.10±0.13 |

| 20 | 586.9±1.9 | 620.0±2.7 | 7.9±1.5 | 5.6±1.0 | -1.19±0.27 | -1.30±0.33 |

| 21 | 450.4±1.4 | 559.8±1.8 | 37.9±5.7 | 13.1±1.3 | -1.24±0.08 | -1.20±0.08 |

| 22 | 490.1±1.3 | 530.9±2.2 | 9.5±1.7 | 14.3±6.7 | -1.41±0.25 | -1.23±0.27 |

| 23 | 503.9±1.6 | 539.1±1.7 | 29.6±1.1 | 58.9±1.6 | -1.07±0.02 | -1.21±0.02 |

| 24 | 462.8±1.3 | 400.5±1.1 | 26.9±1.0 | 89.6±13.8 | -0.94±0.01 | -0.91±0.01 |

| 25 | 415.2±1.3 | 395.0±3.1 | 19.7±1.0 | 46.8±1.9 | -0.85±0.01 | -0.90±0.01 |

| 26 | 482.6±1.9 | 422.9±2.5 | 64.4±1.8 | 70.7±18.7 | -0.92±0.01 | -0.75±0.07 |

| 27 | 281.5±2.0 | 302.8±1.8 | 173.7±3.1 | 109.6±2.5 | -0.93±0.01 | -0.91±0.01 |

| 28 | 317.9±2.4 | 286.1±2.1 | 128.5±10.9 | 44.1±2.2 | -0.93±0.01 | -0.90±0.02 |