HFI data compression


Revision as of 09:10, 10 December 2014

Data compression

Data compression scheme

The output of the readout electronics unit (REU) consists of one value for each of the 72 science channels (bolometers and thermometers) for each modulation half-period. This number, $S_{\rm REU}$, is the sum of the 40 16-bit ADC signal values measured within the given half-period. The data processor unit (DPU) performs a lossy quantization of $S_{\rm REU}$.

We define a compression slice of 254 $S_{\rm REU}$ values, corresponding to about 1.4 s of observation for each detector and to a strip on the sky about 8° long. The mean $\langle S_{\rm REU} \rangle$ of the data within each compression slice is computed, and the data are demodulated using this mean:

$$S_{{\rm demod},i} = \left(S_{{\rm REU},i} - \langle S_{\rm REU} \rangle\right)(-1)^{i},$$

where $1 \le i \le 254$ is the running index within the compression slice.
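The demodulation step can be illustrated with a minimal sketch. The function name `demodulate` is hypothetical, and the toy input (values alternating by ±5 around an offset of 100) is an assumption chosen only to show that a signal switching sign every half-period becomes a constant once the slice mean is removed and the sign is flipped on alternate samples:

```python
from statistics import fmean

def demodulate(s_reu):
    """Return the slice mean and the demodulated samples of one compression slice.

    s_reu: the S_REU values of one slice (254 on board; any length works here).
    Implements S_demod,i = (S_REU,i - <S_REU>) * (-1)**i with i = 1, 2, ...
    """
    mean_reu = fmean(s_reu)
    demod = [(s - mean_reu) * (-1) ** i for i, s in enumerate(s_reu, start=1)]
    return mean_reu, demod
```

For example, `demodulate([105, 95, 105, 95])` returns a mean of 100.0 and the constant sequence `[-5.0, -5.0, -5.0, -5.0]`.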

The mean $\langle S_{\rm demod} \rangle$ of the demodulated data $S_{{\rm demod},i}$ is computed and subtracted, and the resulting slice data are quantized according to a step size $Q$ that is fixed per detector:

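$$S_{{\rm DPU},i} = \mathrm{round}\left[\frac{S_{{\rm demod},i} - \langle S_{\rm demod} \rangle}{Q}\right].$$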

This is the lossy part of the algorithm: the required compression factor, obtained by tuning the quantization step $Q$, adds noise whose variance is approximately 2% of the white-noise variance of the data. This is discussed below.

The two means $\langle S_{\rm REU} \rangle$ and $\langle S_{\rm demod} \rangle$ are computed as 32-bit words and sent through the telemetry, together with the $S_{{\rm DPU},i}$ values. Variable-length encoding of the $S_{{\rm DPU},i}$ values is performed on board, and the inverse decoding is applied on the ground.
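To make the full chain concrete, here is a minimal sketch of one slice passing through demodulation, mean subtraction and quantization, followed by a hypothetical ground-side inverse that uses only the two transmitted means and the integer $S_{{\rm DPU},i}$ values. All function names are illustrative, the variable-length coding stage is omitted, and the input (white Gaussian noise with $\sigma = 1$ on an offset of 1000) is an assumed toy model, not flight data:

```python
import random
from statistics import fmean, pvariance

SLICE_LEN = 254  # samples per compression slice, as stated in the text

def compress_slice(s_reu, q):
    """Sketch of the on-board steps for one slice: demodulate, remove mean, quantize.

    Python's round() rounds halves to even; the flight rounding rule is not
    specified in the text, and either choice keeps the error within q/2.
    """
    mean_reu = fmean(s_reu)
    demod = [(s - mean_reu) * (-1) ** i for i, s in enumerate(s_reu, start=1)]
    mean_demod = fmean(demod)
    s_dpu = [round((d - mean_demod) / q) for d in demod]  # integers sent to the encoder
    return mean_reu, mean_demod, s_dpu

def decompress_slice(mean_reu, mean_demod, s_dpu, q):
    """Hypothetical ground-side inverse: rescale, restore both means, re-modulate."""
    return [(k * q + mean_demod) * (-1) ** i + mean_reu
            for i, k in enumerate(s_dpu, start=1)]

def roundtrip_errors(q, n_slices=200, seed=0):
    """Reconstruction errors for simulated white noise (sigma = 1, offset 1000)."""
    rng = random.Random(seed)
    errors = []
    for _ in range(n_slices):
        s_reu = [1000.0 + rng.gauss(0.0, 1.0) for _ in range(SLICE_LEN)]
        restored = decompress_slice(*compress_slice(s_reu, q), q)
        errors.extend(r - s for r, s in zip(restored, s_reu))
    return errors
```

With `q = 0.4` (that is, $Q = \sigma/2.5$ for this toy noise), every reconstruction error stays within $\pm Q/2$, and `pvariance(roundtrip_errors(0.4))` comes out close to $Q^2/12 \approx 0.013$.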

Performance of the data compression during the mission

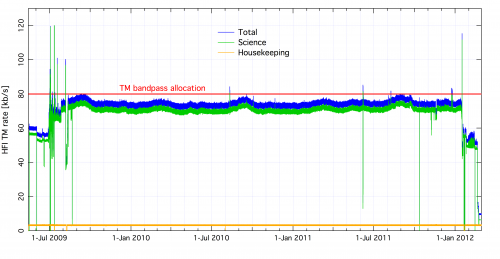

Optimal use of the bandwidth available for the downlink was initially obtained by using $Q = \sigma/2.5$ for all bolometer signals. After 12 December 2009, and only for the 857 GHz detectors, the value was reset to $Q = \sigma/2.0$ to avoid data loss caused by exceeding the downlink rate limit. With these settings, the load never exceeded the allowed bandwidth during the mission, as shown in the figure.
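Why a larger step reduces the downlink load can be estimated from the Shannon entropy of the quantized values, which bounds the mean length of an ideal variable-length code for independent samples. This is a sketch under loud assumptions: the on-board code is not specified here, the input is taken to be pure Gaussian noise (glitches and Galactic-plane crossings, which add outliers, are ignored), and the function names are hypothetical:

```python
import math
from statistics import NormalDist

def quantized_gaussian_entropy(q, sigma=1.0):
    """Entropy (bits/sample) of round(x / q) for x ~ N(0, sigma^2)."""
    nd = NormalDist(0.0, sigma)
    kmax = math.ceil(10 * sigma / q)  # bins beyond ~10 sigma carry negligible probability
    h = 0.0
    for k in range(-kmax, kmax + 1):
        p = nd.cdf((k + 0.5) * q) - nd.cdf((k - 0.5) * q)
        if p > 0.0:
            h -= p * math.log2(p)
    return h

def high_resolution_entropy(q, sigma=1.0):
    """Approximation log2(sqrt(2*pi*e) * sigma / q), valid for q well below sigma."""
    return math.log2(math.sqrt(2.0 * math.pi * math.e) * sigma / q)
```

In this idealized model, `quantized_gaussian_entropy(0.4)` ($Q = \sigma/2.5$) is about 3.4 bits per sample, and moving to $Q = \sigma/2.0$ saves roughly 0.3 bits per sample, close to $\log_2(2.5/2.0)$.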

Setting the quantization step in flight

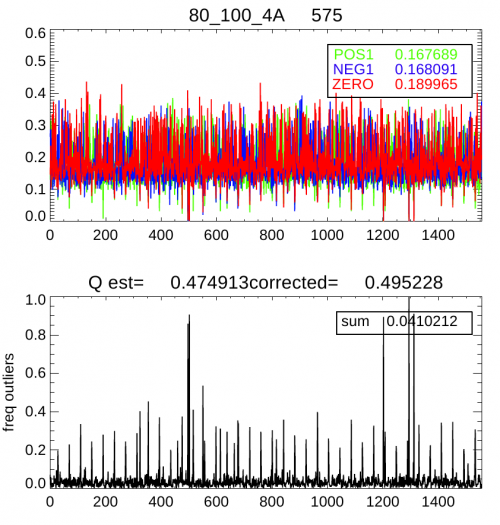

The only parameter that enters the Planck-HFI compression algorithm is the size of the quantization step, in units of $\sigma$, the white-noise standard deviation of each channel. This quantity was adjusted during the mission by monitoring $p_0$, the mean frequency of samples falling in the central quantization bin $[-Q/2, Q/2]$ within each compression slice (254 samples). For pure Gaussian noise, this frequency is related to the step size (in units of $\sigma$) by

$$\hat Q = 2\sqrt{2}\,\mathrm{erf}^{-1}(p_0) \simeq 2.5\,p_0,$$

where the approximation is valid for $p_0 < 0.4$. In Planck, however, the channel signal is not purely Gaussian, since glitches and the periodic crossings of the Galactic plane add strong outliers to the distribution. Using the frequency $p_{\rm out}$ of outliers above $5\sigma$, simulations show that the following formula gives a valid estimate:

$$\hat Q_{\rm cor} = 2.5\,\frac{p_0}{1-p_{\rm out}}.$$

The figure shows an example of the timelines that were used to monitor and adjust the quantization setting.
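The step-size estimators described above can be sketched as follows, assuming $p_0$ and $p_{\rm out}$ have already been measured for a slice. The function names are hypothetical. Because the Python standard library has no inverse error function, the exact relation $\hat Q = 2\sqrt{2}\,\mathrm{erf}^{-1}(p_0)$ is evaluated through the identical form $2\,\Phi^{-1}\left((1+p_0)/2\right)$, with $\Phi$ the standard normal CDF:

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # unit-variance Gaussian: the step is expressed in units of sigma

def q_hat_exact(p0):
    """Q/sigma from the central-bin frequency p0, for pure Gaussian noise.

    2*sqrt(2)*erfinv(p0) is computed as 2*Phi^-1((1 + p0)/2); the two are identical.
    """
    return 2.0 * _STD_NORMAL.inv_cdf((1.0 + p0) / 2.0)

def q_hat(p0):
    """Linear approximation, valid for p0 < 0.4."""
    return 2.5 * p0

def q_hat_corrected(p0, p_out):
    """Outlier-corrected estimate: rescale p0 to the fraction of non-outlier samples."""
    return 2.5 * p0 / (1.0 - p_out)

def central_bin_frequency(q):
    """Expected p0 for pure Gaussian noise and step q (in units of sigma)."""
    return 2.0 * _STD_NORMAL.cdf(q / 2.0) - 1.0
```

For $Q = \sigma/2.5$, `central_bin_frequency(0.4)` is about 0.16, and the linear estimate recovers the step to within 1%. The correction assumes outliers never land in the central bin, so a fraction $p_{\rm out}$ of outliers lowers the observed $p_0$ by exactly the factor $1 - p_{\rm out}$ that $\hat Q_{\rm cor}$ divides out.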

Impact of the data compression on science

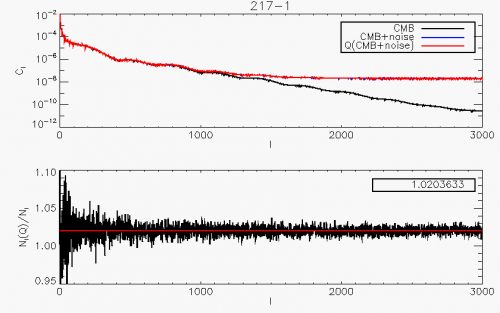

The effect of a pure quantization process of step $Q$ (in units of $\sigma$) on the statistical moments of a signal is well known [1]. When the step is below the noise level (which is largely the case for Planck), one can apply the Quantization Theorem, which states that the process is equivalent to adding a uniform random noise in the range $[-Q/2, Q/2]$. The net effect of quantization is therefore to add quadratically to the signal a variance $Q^2/12$, which raises the noise level by a factor $\sqrt{1 + Q^2/12}$. The spectral effect of the non-linear quantization process is theoretically much more complicated and depends on the details of the signal and noise. As a rule of thumb, pure quantization adds an autocorrelation contribution that is suppressed by a factor depending on the step size relative to the noise [2].

Note, however, that Planck does not perform a pure quantization process. A data-dependent baseline (the mean of each compression slice) is subtracted. Furthermore, for the science data, circles on the sky are coadded, and coaddition is performed again when projecting the rings onto the sky (map-making). To study the full effect of the Planck-HFI data compression algorithm on our main science products, we simulated a realistic data timeline corresponding to the observation of a pure CMB sky. The signal, with and without compression/decompression, was then projected onto the sky using the Planck scanning strategy. The two maps were analysed with the HEALPix `anafast` procedure, and the two reconstructed angular power spectra were compared. The figure shows the result for the quantization step used in the simulation.
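As a worked example, uniform noise on $[-Q/2, Q/2]$ has variance $Q^2/12$; inserting the two flight settings from the performance section ($Q = \sigma/2.5$ and $Q = \sigma/2.0$) gives the following. The larger step is consistent with the roughly 2% variance increase quoted in the description of the compression scheme, and both settings raise the noise level by about 1% or less:

$$\sigma_{\rm tot}^2 = \sigma^2 + \frac{Q^2}{12}$$

$$Q = 0.4\,\sigma \;\Rightarrow\; \frac{Q^2}{12} \approx 0.0133\,\sigma^2, \quad \sigma_{\rm tot} \approx 1.0066\,\sigma$$

$$Q = 0.5\,\sigma \;\Rightarrow\; \frac{Q^2}{12} \approx 0.0208\,\sigma^2, \quad \sigma_{\rm tot} \approx 1.0104\,\sigma$$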

It is remarkable that the full procedure of baseline subtraction + quantization + ring-making + map-making still leads to the variance increase predicted by simple timeline quantization, $Q^2/12$.

Furthermore, we checked that the noise added by the compression algorithm is white.

The compression is not expected to introduce any non-Gaussianity: pure quantization adds no skewness and less than 0.001 kurtosis, and the coaddition of circles and then rings erases any non-Gaussian contribution, according to the Central Limit Theorem.
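The whiteness and kurtosis statements for pure quantization can be checked with a small sketch. It models only pure timeline quantization (no slice baselines, ring-making or map-making), and the toy timeline (a slow sinusoid of amplitude 3 and period 1000 samples plus unit-variance white noise) is an assumption for illustration, not Planck data. Setting the noise to zero shows the contrast: without noise dominating the step, the quantization error follows the slow signal and is strongly correlated. The kurtosis figure uses the Quantization Theorem's independent-uniform-noise model, where the uniform fourth cumulant is $-Q^4/120$:

```python
import math
import random
from statistics import fmean

def quantization_error_stats(q, noise_sigma=1.0, n=200_000, seed=1):
    """Quantize a toy timeline with step q; return (error variance, lag-1 autocorrelation).

    Toy timeline (an assumption): 3*sin(2*pi*i/1000) plus white Gaussian noise of
    standard deviation noise_sigma. Units are such that sigma = 1 when noise_sigma = 1.
    """
    rng = random.Random(seed)
    x = [3.0 * math.sin(2.0 * math.pi * i / 1000) + rng.gauss(0.0, noise_sigma)
         for i in range(n)]
    err = [q * round(v / q) - v for v in x]  # pure quantization error, no baseline
    mean = fmean(err)
    var = fmean((e - mean) ** 2 for e in err)
    lag1 = fmean((err[i] - mean) * (err[i + 1] - mean) for i in range(n - 1)) / var
    return var, lag1

def predicted_excess_kurtosis(q):
    """Excess kurtosis of N(0, 1) plus independent uniform noise on [-q/2, q/2].

    Cumulants of independent terms add: the Gaussian contributes no fourth cumulant,
    the uniform term contributes -q**4 / 120, and the third cumulant stays zero.
    """
    return (-q ** 4 / 120.0) / (1.0 + q * q / 12.0) ** 2
```

With `q = 0.4`, the error variance matches $Q^2/12$ and the lag-1 autocorrelation is consistent with zero, while the noiseless run gives a large positive value. The predicted excess kurtosis is about $-2\times10^{-4}$ for $Q = 0.4\,\sigma$ and about $-5\times10^{-4}$ for $Q = 0.5\,\sigma$, both below 0.001 in magnitude.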

References

- [1] B. Widrow, "A Study of Rough Amplitude Quantization by Means of Nyquist Sampling Theory," IRE Transactions on Circuit Theory, CT-3(4), 266-276 (1956).

- [2] E. Banta, "On the autocorrelation function of quantized signal plus noise," IEEE Transactions on Information Theory, 11, 114-117 (1965).
