Ground Segment and Operations

Latest revision as of 09:23, 10 July 2018


The Planck Ground Segment

A high-level description of the Planck Ground Segment and of the conduct of operations can be found in the Planck Operations Scenario[1]. A short summary follows in this section.

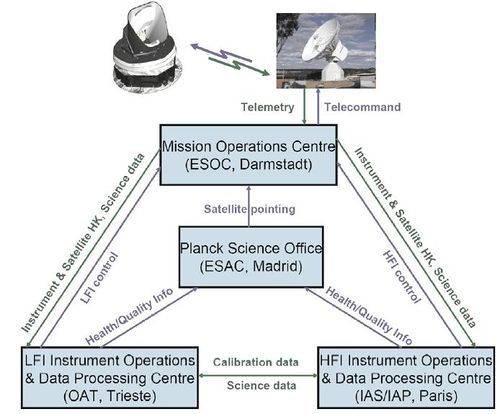

The Planck Ground Segment consisted of four geographically distributed centres.

The Mission Operations Centre

The mission operations centre (MOC), located at ESA’s operations centre in Darmstadt (Germany), was responsible for all aspects of flight control and of the health and safety of the Planck spacecraft, including both instruments. It planned and executed all necessary satellite activities, including instrument commanding requests from the instrument operations centres.

MOC communicated with the satellite using ESA’s 35-m antenna located in New Norcia (Australia), or that in Cebreros (Spain), over a daily 3-h period, during which it uplinked a scheduled activity timeline which was autonomously executed by the satellite, and downlinked the science and housekeeping (HK) data acquired by the satellite during the past 24 h. The downlinked data were transferred from the antenna to the MOC over a period of typically 8 h; at MOC they were put onto a data server from where they were retrieved by the two data processing centres.

The Planck Science Office

The Planck Science Office (PSO) is located at ESA’s European Space Astronomy Centre (ESAC) in Villanueva de la Cañada, near Madrid (Spain).

Its main responsibilities include:

- coordinating scientific operations of the Planck instruments;

- designing, planning and executing the Planck observation strategy;

- providing MOC with a detailed pointing plan with a periodicity of about one month;

- creating and updating the specifications of the Planck Legacy Archive (PLA) developed by the Science Archives Team at ESAC;

- testing and operating the PLA.

The LFI instrument Operations and Data Processing Centre

The LFI instrument Operations and Data Processing Centre, located at the Osservatorio Astronomico di Trieste (Italy), is responsible for the operation of the LFI instrument, and the processing of the data acquired by LFI into the final scientific products of the mission.

The HFI instrument Operations and Data Processing Centre

The HFI instrument Operations Centre and Data Processing Centre, located, respectively, at the Institut d’Astrophysique Spatiale in Orsay (France) and at the Institut d’Astrophysique de Paris (France), are similarly responsible for the operation of the HFI instrument, and (with several other institutes in France and the UK) for the processing of the data acquired by HFI into the final scientific products of the mission.

Data flow in the Planck Ground Segment

Uplink

The PSO was responsible for defining the Planck Scanning Strategy, which was implemented via Pre-Programmed Pointing Lists (PPLs) provided periodically to the MOC. The Flight Dynamics team at MOC adapted these into Augmented Pre-Programmed Pointing Lists (APPLs), which took into account practical constraints such as ground-station scheduling and Operational Day (OD) boundaries, among others.

MOC sent pointing and instrument commands to the spacecraft, and received the housekeeping telemetry and science data, through the ground stations (for Planck, mostly those at New Norcia and Cebreros).
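An Operational Day runs from the start of one daily telecommunication period (satellite acquisition of signal, AOS) to the start of the next, so its length varies with the pass schedule. As a minimal sketch, assuming a sorted list of AOS times is available, assigning a timestamp to its OD is a binary search. The function names and AOS times below are hypothetical illustrations, not Flight Dynamics software.

```python
from bisect import bisect_right
from datetime import datetime, timedelta


def od_index(t: datetime, aos_times: list[datetime]) -> int:
    """Return the index k of the OD containing time t.

    OD k runs from aos_times[k] (inclusive) to aos_times[k + 1] (exclusive).
    aos_times must be sorted; times before the first AOS return -1.
    """
    return bisect_right(aos_times, t) - 1


def od_durations(aos_times: list[datetime]) -> list[timedelta]:
    """Length of each complete OD; it varies because pass start times vary."""
    return [end - start for start, end in zip(aos_times, aos_times[1:])]


# Hypothetical AOS times, for illustration only.
AOS_TIMES = [
    datetime(2009, 8, 13, 8, 0),
    datetime(2009, 8, 14, 7, 40),
    datetime(2009, 8, 15, 8, 10),
]

if __name__ == "__main__":
    print(od_index(datetime(2009, 8, 14, 7, 39), AOS_TIMES))  # still OD 0
    print(od_index(datetime(2009, 8, 14, 7, 40), AOS_TIMES))  # OD 1 starts at AOS
    print(od_durations(AOS_TIMES))
```

Using `bisect_right` makes an AOS instant belong to the OD it opens, which matches the "from the start of one period to the start of the next" definition.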

Downlink

The Planck satellite continuously generated (and stored on board) data at the following typical rates: 21 kilobit/s (kbps) of housekeeping (HK) data from all on-board sources, 44 kbps of LFI science data and 72 kbps of HFI science data. The data were brought to the ground in a daily pass of approximately 3 h. Besides the data downloads, the passes also acquired real-time HK and a 20-min period of real-time science (used to monitor instrument performance during the pass). Planck used the two ESA deep-space ground stations in New Norcia (Australia) and Cebreros (Spain). Scheduling of the daily telecommunication period was quite stable, with small perturbations due to the need to coordinate use of the antenna with other ESA satellites (in particular Herschel).
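The rates above fix the daily data volume and, because roughly 24 h of data must come down in a pass of about 3 h, they also imply that the average downlink rate must be at least eight times the generation rate. A back-of-the-envelope sketch, assuming 1 kbit = 1000 bits and ignoring the real-time HK and science acquired during the pass, packet overheads and pass margins:

```python
# Typical continuous on-board data rates quoted above, in kbit/s.
# Assumption: 1 kbit = 1000 bits.
RATES_KBPS = {"HK": 21, "LFI": 44, "HFI": 72}

SECONDS_PER_DAY = 86_400
PASS_SECONDS = 3 * 3_600  # nominal ~3 h daily pass


def daily_volume_bits(rates_kbps: dict[str, float]) -> float:
    """Bits generated on board in 24 h at the given continuous rates."""
    return sum(rates_kbps.values()) * 1_000 * SECONDS_PER_DAY


def min_downlink_bps(rates_kbps: dict[str, float], pass_seconds: int = PASS_SECONDS) -> float:
    """Average rate needed to bring one day of data down in a single pass."""
    return daily_volume_bits(rates_kbps) / pass_seconds


if __name__ == "__main__":
    bits = daily_volume_bits(RATES_KBPS)
    print(f"total rate: {sum(RATES_KBPS.values())} kbps")
    print(f"daily volume: {bits / 8 / 1e9:.2f} GB")
    print(f"minimum average downlink: {min_downlink_bps(RATES_KBPS) / 1e6:.3f} Mbit/s")
```

With these inputs the total generation rate is 137 kbps, the daily volume is about 1.48 GB, and the average downlink must be at least about 1.1 Mbit/s before any margin.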

At the ground station the telemetry was received by redundant chains of front-end/back-end equipment. The data flowed to the mission operations centre (MOC) at ESOC in Darmstadt (Germany), where they were processed by redundant mission control software (MCS) installations and made available to the science ground segment. To reduce bandwidth requirements between the station and ESOC, usually only one set of science telemetry was transferred. Software run after each pass checked the completeness of the data and built a catalogue of data completeness, which the science ground segment used to control its own data-transfer process. Where gaps were detected, attempts to fill them were made as an offline activity (normally on the next working day), the first step being to re-flow the relevant data from the station. Early in the mission gaps were more frequent, with some hundreds of packets affected per week (an impact on data return of order 50 ppm), due principally to a combination of software problems in data ingestion and distribution in the MCS and imperfect behaviour of the software gap check. Software updates implemented during the mission improved the situation, so that gaps became much rarer, with a total impact on data return well below 1 ppm.
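This page does not describe how the post-pass completeness check worked. One common approach, sketched here under the assumption that each packet stream carries a CCSDS-style 14-bit sequence counter, is to look for jumps in that counter. The helper names are hypothetical; this is not the MCS gap-check software.

```python
SEQ_MODULUS = 1 << 14  # assumption: a 14-bit, wrapping packet sequence count


def find_gaps(seq_counts: list[int]) -> list[tuple[int, int]]:
    """Return (first_missing_count, n_missing) for each jump in one stream.

    Assumes the counts are in on-board generation order with duplicates
    removed. A step of k > 1 (modulo 2**14) means k - 1 packets are missing.
    A gap of 2**14 - 1 or more packets can alias to a smaller (or zero) step,
    so the counter alone cannot size very long gaps.
    """
    gaps = []
    for prev, cur in zip(seq_counts, seq_counts[1:]):
        step = (cur - prev) % SEQ_MODULUS
        if step > 1:
            gaps.append(((prev + 1) % SEQ_MODULUS, step - 1))
    return gaps


def loss_ppm(n_missing: int, n_total: int) -> float:
    """Data-return impact in parts per million."""
    return 1e6 * n_missing / n_total


if __name__ == "__main__":
    print(find_gaps([16382, 16383, 0, 2]))  # wraps cleanly, then misses count 1
    print(loss_ppm(50, 1_000_000))
```

Aggregating the output of such a check over each pass would give the kind of completeness catalogue described above, with each entry a candidate for re-flow from the station.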

Redump of data from the spacecraft was attempted when there had been losses in the space link. This was only necessary on very few occasions. In each case the spacecraft redump successfully recovered all the data.

An operational principle of the mission was that even a completely missed ground-station pass should have no impact on nominal science. Commanding continuity was ensured by keeping more than 24 h of commanding timeline queued on board. The telemetry was stored on board in a circular buffer of roughly 60 h in solid-state memory, and could be recovered later using the margin in each pass, or more rapidly by seeking additional station coverage after an event. The lost-pass scenario in fact occurred only once (on 21 December 2009), when snow on the dish at Cebreros led to the loss of the entire pass; a rapid recovery was made using spare time available on the New Norcia station. Smaller impacts on a pass occurred more often (e.g., the first approximately 10 min of a pass being lost due to a station acquisition problem); these could normally be resolved simply by restarting a software task or rebooting station equipment, and the resulting delays were normally accommodated within the margin of the pass itself or during the subsequent pass.
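The missed-pass tolerance can be checked with a toy model: telemetry is produced continuously, a pass at the end of each day downlinks the backlog, and the store holds roughly 60 h. The class and function names are hypothetical, passes are treated as instantaneous, and the per-pass margin is ignored; under these assumptions one missed pass leaves a 48 h backlog, which fits, whereas two consecutive missed passes would overwrite 12 h of data.

```python
from collections import deque


class UndumpedStore:
    """Toy model of the on-board circular telemetry buffer.

    Only telemetry not yet downlinked is tracked, in one-hour chunks. Data are
    lost only when the undumped backlog would exceed the buffer capacity, in
    which case the oldest undumped hour is overwritten.
    """

    def __init__(self, capacity_h: int = 60) -> None:
        self.backlog = deque(maxlen=capacity_h)
        self.hours_lost = 0

    def record_hour(self, hour: int) -> None:
        if len(self.backlog) == self.backlog.maxlen:
            self.hours_lost += 1  # deque(maxlen=...) drops the oldest entry
        self.backlog.append(hour)

    def dump(self) -> list[int]:
        """Downlink everything still undumped and clear the backlog."""
        data = list(self.backlog)
        self.backlog.clear()
        return data


def simulate(days: int, missed_passes: set[int], capacity_h: int = 60) -> int:
    """Hours of telemetry lost with one pass at the end of each day."""
    store = UndumpedStore(capacity_h)
    for day in range(days):
        for h in range(24):
            store.record_hour(day * 24 + h)
        if day not in missed_passes:
            store.dump()
    return store.hours_lost


if __name__ == "__main__":
    print(simulate(5, {1}))     # one missed pass
    print(simulate(5, {1, 2}))  # two consecutive missed passes
```

Tracking only the undumped backlog is enough here: in a real circular buffer, already-downlinked data may be overwritten freely, so loss occurs only when the backlog outgrows the capacity.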

All the data downloaded from the satellite, and processed products such as filtered attitude information, were made available each day for retrieval from the MOC by the LFI and HFI data processing centres (DPCs). Typically, the data arrived at the LFI (or HFI) DPC 2 (4) hours after the start of the daily acquisition window. Automated processing of the incoming telemetry was carried out each day by the LFI (HFI) DPCs and yielded a daily data quality report which was made available to the rest of the ground segment typically 22 (14) hours later. More sophisticated processing of the data in each of the two DPCs is described in Zacchei et al. (2011) and Planck HFI Core Team (2011b).

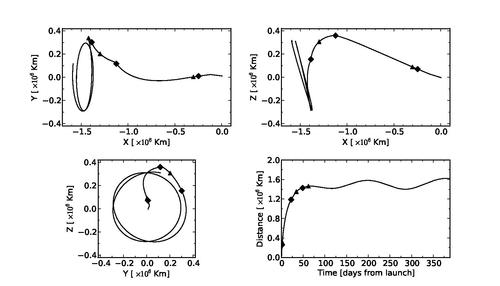

Launch

Planck was launched from the Centre Spatial Guyanais in Kourou (French Guiana) on 14 May 2009 at its nominal lift-off time of 13:12 UT, on an Ariane 5 ECA rocket of Arianespace. ESA’s Herschel observatory was launched on the same rocket. At 13:37:55 UT, Herschel was released from the rocket at an altitude of 1200 km; Planck followed at 13:40:25 UT. The separation attitudes of both satellites were within 0.1° of prediction. The Ariane rocket placed Planck with excellent accuracy (semimajor axis within 1.6% of prediction) on a trajectory towards the second Lagrangian point of the Earth-Sun system (L2). The orbit describes a Lissajous trajectory around L2 with a period of roughly six months that avoids crossing the Earth’s penumbra for at least four years.

A short description of the launch and Low-Earth-Orbit operations is available in Feucht & Gienger 2010[2].

Early operations and transfer to final orbit

After release from the rocket, three large manoeuvres were carried out to place Planck in its intended final orbit. The first (14.35 m s−1), intended to correct for errors in the rocket injection, was executed on 15 May at 20:01:05 UT, with a slight over-performance of 0.9% and an error in direction of 1.3° (a touch-up manoeuvre was carried out on 16 May at 07:17:36 UT). The second and major (mid-course) manoeuvre (153.6 m s−1) took place between 5 and 7 June, and a touch-up (11.8 m s−1) was executed on 17 June. The third and final manoeuvre (58.8 m s−1), to inject Planck into its final orbit, was executed between 2 and 3 July. The total fuel consumption of these manoeuvres, which were carried out using Planck’s coarse (20 N) thrusters, was 205 kg.
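The quoted delta-v values and the 205 kg of fuel are linked by the Tsiolkovsky rocket equation, Δv = I_sp g₀ ln(m₀ / (m₀ − m_prop)). The sketch below inverts it to give the effective specific impulse implied by those numbers. The initial mass is a hypothetical round placeholder, not a value from this page, and the unquantified 16 May touch-up and any fuel used between burns are ignored, so the result is only a rough plausibility check.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2

# Delta-v of the transfer manoeuvres quoted above, in m/s. The 16 May
# touch-up is not quantified on this page, so it is omitted here.
TRANSFER_DV = [14.35, 153.6, 11.8, 58.8]
TRANSFER_PROPELLANT_KG = 205.0


def effective_isp(delta_v: float, m0: float, m_prop: float) -> float:
    """Specific impulse (s) implied by dv = Isp * g0 * ln(m0 / (m0 - m_prop))."""
    return delta_v / (G0 * math.log(m0 / (m0 - m_prop)))


def propellant_needed(delta_v: float, m0: float, isp: float) -> float:
    """Propellant mass (kg) for a total delta_v from initial mass m0."""
    return m0 * (1.0 - math.exp(-delta_v / (isp * G0)))


if __name__ == "__main__":
    M0_HYPOTHETICAL = 1950.0  # placeholder initial mass (kg), NOT from this page
    dv = sum(TRANSFER_DV)
    isp = effective_isp(dv, M0_HYPOTHETICAL, TRANSFER_PROPELLANT_KG)
    print(f"total transfer delta-v: {dv:.2f} m/s")
    print(f"implied effective Isp: {isp:.0f} s")
```

Because the logarithm turns mass ratios into sums, consecutive burns with the same thrusters can be lumped into one equivalent burn, which is why summing the manoeuvre delta-v values is legitimate here.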

Once Planck was in its final orbit, very small manoeuvres were required at approximately monthly intervals (1 m s−1 per year) to keep it from drifting away from its intended path around L2. The attitude manoeuvres required to follow the scanning strategy needed about 2.6 m s−1 per year. Overall, the excellent performance of the launch and orbit manoeuvres left a large amount of fuel on board at the end of mission operations.

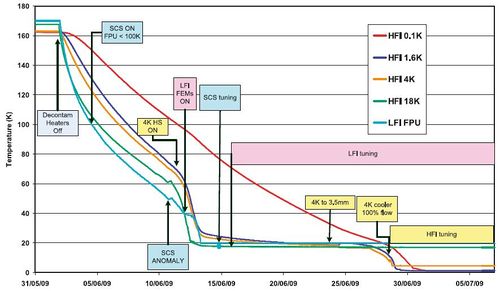

Planck started cooling down radiatively shortly after launch. Heaters were activated to hold the focal plane at 250 K, which was reached around 5 h after launch. The valve opening the exhaust piping of the dilution cooler was activated at 03:30 UT, and the ⁴He-JT cooler compressors were turned on at low stroke at 05:20 UT. After these essential operations were completed, on the second day after launch, the focal plane temperature was allowed to descend to 170 K for out-gassing and decontamination of the telescope and focal plane.

Initial science operations

Commissioning

The first period of operations focussed on commissioning activities, i.e., functional check-out procedures of all sub-systems and instruments of the Planck spacecraft, in preparation for running science operations related to calibration and performance verification of the payload. Planning for commissioning operations was driven by the telescope decontamination period of 2 weeks and the subsequent cryogenic cool-down of the payload and instruments. The overall duration of the cool-down was approximately 2 months, including the decontamination period.

The sequence of commissioning activities covered the following areas:

- on-board commanding and data management;

- attitude measurement and control;

- manoeuvring ability and orbit control;

- telemetry and telecommand;

- power control;

- thermal control;

- payload basic functionality, including:

  - the LFI;

  - the HFI;

  - the cryogenic chain;

  - the Standard Radiation Environment Monitor (SREM);

  - the Fibre-Optic Gyro unit (FOG), a piggy-back experiment which was not used as part of the attitude control system.

The commissioning activities were executed very smoothly and all sub-systems were found to be in good health. The figure below outlines the cool-down sequence, indicating when the main instrument-related commissioning activities took place.

The most significant unexpected issues that had to be addressed during these early operational phases were the following.

- The X-band transponder showed an initialisation anomaly during switch-on which was fixed by a software patch.

- Large reorientations of the spin axis were imperfectly completed and required optimisation of the on-board parameters of the attitude control system.

- The data rate required to transmit all science data to the ground was larger than planned, due to the unexpectedly high level of Galactic cosmic rays, which led to a high glitch rate on the data stream of the HFI bolometers (Planck HFI Core Team 2011a); glitches increase the dynamic range and consequently the data rate. The total data rate was controlled by increasing the compression level of a few less critical thermometers.

- The level of thermal fluctuations in the 20-K stage was higher than originally expected. Optimisation of the sorption cooler operation led to an improvement, though the fluctuations still remained approximately 25% higher than expected (Planck Collaboration 2011b).

- The 20-K sorption cooler turned itself off on 10 June 2009, an event which was traced to an incorrectly set safety threshold.

- A small number of sudden pressure changes were observed in the ⁴He-JT cooler during its first weeks of operation, and were most likely due to impurities present in the cooler gas (Planck Collaboration 2011b). The events disappeared after some weeks, as the impurities became trapped in the cooler system.

- The ⁴He-JT cooler suffered an anomalous switch to standby mode on 6 August 2009, following a current spike in the charge regulator unit which controls the current levels between the cooler electronics and the satellite power supply (Planck Collaboration 2011b). The cooler was restarted 20 h after the event, and the thermal stability of the 100 mK stage was recovered about 47 h later. The physical cause of this anomaly was not found, but the problem has not recurred.

- Instabilities were observed in the temperature of the ⁴He-JT stage, which were traced to interactions with lower temperature stages, similar in nature to instabilities observed during ground testing (Planck Collaboration 2011b). They were fixed by exploring and tuning the operating points of the multiple stages of the cryo-system.

- The length of the daily telecommunications period was increased from 180 to 195 min to improve the available margin and ensure completion of all daily activities. Subsequent optimisations of operational procedures allowed the daily contact period to be reduced again to 3 h.
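The cosmic-ray item above notes that glitches increase the dynamic range and hence the data rate. The toy model below is deliberately much simpler than HFI's actual on-board compression and is not taken from it: each block of samples gets a fixed code width just wide enough for that block's range, so a spike inflates the cost of the block it lands in, and a higher glitch rate inflates more blocks. Function names and sample values are hypothetical.

```python
def bits_needed(samples: list[int]) -> int:
    """Bits per sample for a naive fixed-width code spanning min..max."""
    span = max(samples) - min(samples)
    return max(1, span.bit_length())


def stream_bits(samples: list[int], block: int) -> int:
    """Total bits when each block of `block` samples gets its own width."""
    total = 0
    for i in range(0, len(samples), block):
        chunk = samples[i:i + block]
        total += len(chunk) * bits_needed(chunk)
    return total


if __name__ == "__main__":
    quiet = [100, 102, 99, 101] * 4      # 16 samples, small dynamic range
    one_glitch = quiet.copy()
    one_glitch[5] = 1900                 # a single cosmic-ray-like spike
    two_glitches = one_glitch.copy()
    two_glitches[13] = 1900              # a second spike in another block
    for name, s in [("quiet", quiet), ("1 glitch", one_glitch), ("2 glitches", two_glitches)]:
        print(name, stream_bits(s, block=4))
```

The three streams cost 32, 68 and 104 bits: each glitch costs far more than the one sample it corrupts, which is why the data budget was rebalanced by compressing less critical channels harder.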

The commissioning activities were formally completed at the time when the HFI bolometer stage reached its target temperature of 100 mK, on 3 July 2009 at 01:00 UT. At this time all the critical resource budgets (power, fuel, lifetime, etc.) were found to contain very significant margins with respect to the original specification.

Calibration and performance verification

Calibration and performance verification (CPV) activities started during the cool-down period and continued until the end of August 2009.

Their objectives were to:

- verify that the instruments were optimally tuned and their performance characterised and verified;

- perform all tests and characterisation activities which could not be performed during the routine phase;

- characterise the spacecraft and telescope characteristics of relevance for science (detailed optical characterisation requires the observation of planets, which first came into the field-of-view in October 2009, i.e., after the start of routine operations);

- estimate the lifetime of the cryogenic chain.

CPV activities addressed the following areas:

- tuning and characterisation of the behaviour of the cryogenic chain;

- characterisation of the thermal behaviour of the spacecraft and payload;

- for each of the two instruments: tuning; characterisation and/or verification of performance; calibration (including thermal, RF, noise and stability, and optical response); and data compression properties. In the case of LFI, the detector parameters were optimised in flight (Mennella et al. 2011), whereas for HFI it was merely verified that the on-ground settings had not changed (Planck HFI Core Team 2011);

- determination of the focal plane footprint on the sky;

- verification of scanning strategy parameters;

- characterisation of systematic effects induced by the spacecraft and the telescope, including:

  - dependence on solar aspect angle;

  - dependence on spin;

  - interference from the RF transmitter;

  - straylight rejection;

- pointing performance.

The CPV activities took about two weeks longer than initially planned, mainly due to:

- the anomalous switch to standby mode of the ⁴He-JT cooler on 6 August (costing 6 days until recovery);

- instabilities in the cryo-chain, which required the exploration of a larger parameter space to find an optimal set point;

- additional measurements of the voltage bias space of the LFI radiometers, which were introduced to optimise their noise performance, and led to the requirement of artificially slowing the natural cool-down of the ⁴He-JT stage.

A more detailed description of the relevant parts of these tests can be found in Mennella et al. (2011) and Planck HFI Core Team (2011a).

On completion of all the planned activities, it was concluded that:

- the two instruments were fully tuned and ready for routine operations. No further parameter tuning was expected to be needed, except for the sorption cooler, which required a weekly change in operational parameters;

- the scientific performance parameters of both instruments were in most respects as had been measured on the ground before launch. The only significant exception was that, due to the high level of Galactic cosmic rays, the bolometers of HFI were detecting a higher number of glitches than expected, causing a modest (about 10%) increase of systematic effects on their noise levels;

- the telescope survived launch and cool-down in orbit without any major distortions or changes in its alignment;

- the lifetime of the cryogenic chain was adequate to carry the mission to its foreseen end of operations in November 2010, with a margin of order one year;

- the pointing performance was better than expected, and no changes to the planned scanning strategy were required;

- the satellite did not introduce any major systematic effects into the science data. In particular, the telemetry transponder did not cause radio-frequency interference, which implies that the data acquired during visibility periods are usable for science.

First-Light Survey

The First Light Survey (FLS) was the last major activity planned before the start of routine surveying of the sky. It was conceived as a two-week period during which Planck would be fully tuned up and operated as if it were in its routine phase, so that this stable period could reveal any further tuning required to optimise Planck’s performance in the long-duration surveys to come. The FLS was conducted between 13 and 27 August 2009, and in fact led to the conclusion that the Planck payload was operating stably and optimally and required no further tuning of its instruments. The FLS period was therefore accepted as a valid part of the first Planck survey.

References

- [1] Planck Operations Scenario V1.0

- [2] Feucht & Gienger 2010

Abbreviations

- MOC: [ESA’s] Mission Operations Centre [Darmstadt, Germany]

- ESA: European Space Agency

- HK: Housekeeping

- PSO: Planck Science Office

- PLA: Planck Legacy Archive

- LFI: (Planck) Low Frequency Instrument

- HFI: (Planck) High Frequency Instrument

- PPL: Pre-Programmed Pointing List

- APPL: Augmented Pre-Programmed Pointing List

- OD: Operational Day. Its definition is driven by geometric visibility: an OD runs from the start of one DTCP (daily telecommunication period, i.e., satellite Acquisition Of Signal) to the start of the next DTCP. Because the ground station used and the length of each pass vary, the OD duration varies, but it is roughly one day.

- ESOC: European Space Operations Centre (Darmstadt)

- DPC: Data Processing Centre

- SREM: Standard Radiation Environment Monitor

- FOG: Fibre-Optic Gyroscope

- SCS: Sorption Cooler Subsystem (Planck)

- FEM: Front End Module (LFI cryogenic amplifying stage)

- FPU: Focal Plane Unit

- CPV: Calibration and Performance Verification